Fixing My Deployment Process

2022-01-11

It has been a while since I have changed my blogging workflow, and I think I’ve finally made a measurable change.

After all, I am an SRE with dev experience. I know how I’m supposed to do these things.

Okay, so, let's quickly go over what this blog is. It is no longer WordPress, and it is no longer hosted by a friend.

I’ve been using a static site generator since 2013, and I’ve been settled on Hugo for some time now.

The repository that backs this blog is over 1GB in size with over 1k images:

    mcarlson@server content (master +%) » find . -type f | wc -l
    1271
    mcarlson@server content (master +%) » du -sh .
    1.2G .

That is significant for a git repo. This blog goes back to 2006, and its oldest posts originally came from a Movable Type instance on my original site.

When I switched to pushing to an S3 bucket and letting CloudFront serve it, I had to use a post-commit hook:

    #!/usr/local/bin/bash
    #
    # Deploy to s3 when master gets updated.
    #
    # This expects (and does NOT check for) s3cmd to be installed and configured!
    # This expects (and does NOT check for) hugo to be installed and on your $PATH

    AWS_ACCESS_KEY_ID=XXXXX
    AWS_SECRET_ACCESS_KEY=XXXXX
    CF_DIST=XXXX
    BUCKET='XXXX.XXX'
    prefix=''

    branch=$(git rev-parse --abbrev-ref HEAD)
    if [[ "$branch" == "master" ]]; then
        # Build the site, then sync the generated files and invalidate CloudFront.
        /usr/home/mcarlson/bin/hugo
        s3cmd --cf-invalidate --acl-public --delete-removed --no-progress sync public/* "s3://$BUCKET/$prefix"
    else
        echo "*** s3://$BUCKET/$prefix only syncs when master branch is updated! ***"
        exit 1
    fi

    command -v git-lfs >/dev/null 2>&1 || { echo >&2 "\nThis repository is configured for Git LFS but 'git-lfs' was not found on your path. If you no longer wish to use Git LFS, remove this hook by deleting .git/hooks/post-commit.\n"; exit 2; }
    git lfs post-commit "$@"

So every commit would run this (if it was committed to master).

This was not optimal, and it occasionally broke:

- When s3cmd was updated (or dependencies broke)

- When I made a branch or a significant change and merged it to master, I had to make a fake commit on master to push up the content

- git lfs deployed the metadata of the objects, and not the objects themselves
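The first failure mode is the kind of thing a small guard at the top of the hook could surface earlier. This is only a sketch: `require_cmds` is a made-up helper name, not part of the original hook, and it only checks that the tools are on the `$PATH`, not that they are configured or working.

```shell
# Hypothetical helper (not in the original hook): report every required
# command that cannot be found on $PATH, and return non-zero if any is missing.
require_cmds() {
    local missing=0 cmd
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing required command: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

In a hook like the one above, a line such as `require_cmds hugo s3cmd git-lfs || exit 1` before the build would stop the deploy with a readable message instead of failing halfway through.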

I cannot stress enough that a major gotcha is git lfs.

At some point in the last few months, my post-commit script started deploying the git-lfs pointer files instead of the actual images, because the images were never actually “checked out”. Since this repo is “large” by git standards, Bitbucket forces me to use git-lfs. Well, that is not 100% transparent.
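One way to catch this before anything is uploaded is to look for pointer stubs in the build output. A Git LFS pointer is a small text file whose first line is `version https://git-lfs.github.com/spec/v1`, so a check can search for that string. The sketch below assumes the generated site lives in a directory such as `public/`; `find_lfs_pointers` is a hypothetical helper name, not something from my hook.

```shell
# Hypothetical helper: print every file under the given directory that still
# looks like a Git LFS pointer stub instead of real content.
find_lfs_pointers() {
    find "$1" -type f -exec sh -c '
        for file in "$@"; do
            # Pointer files begin with this version line; only the first
            # 100 bytes are inspected so large images are not read in full.
            if head -c 100 "$file" | grep -qaF "version https://git-lfs.github.com/spec/v1"; then
                printf "%s\n" "$file"
            fi
        done
    ' sh {} +
}
```

Running `find_lfs_pointers public` after the build and refusing to deploy when it prints anything would turn a silent broken-image deploy into a visible failure.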

Bitbucket Pipelines

Instead of having all of this tooling on my server at home (and thus making it difficult to blog from anything else), I really should be leveraging CI/CD tooling. In this case, mostly just continuous deployment tools.

Bitbucket has pipelines available, so I went ahead and set up a new file in my repo called bitbucket-pipelines.yml:

image: debian:buster-slim

pipelines:

branches:

master:

- step:

script:

- apt-get update

- apt-get -y install awscli hugo git git-lfs

- git lfs fetch

- git lfs checkout

- mkdir themes

- git clone https://github.com/htdvisser/hugo-base16-theme themes/base16

- hugo

- aws s3 sync --acl public-read ./public/ s3://mybucket.com/

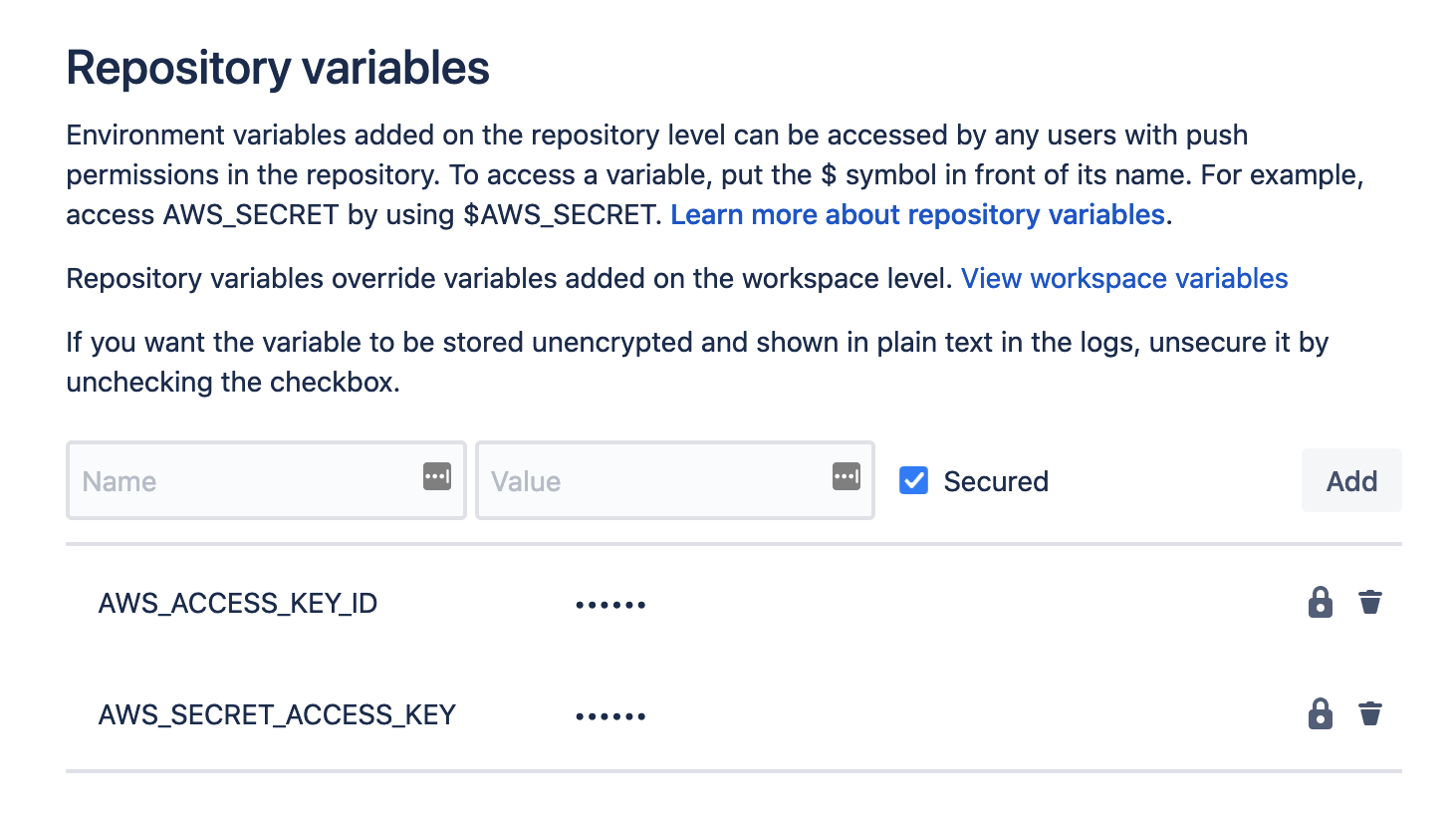

- aws cloudfront create-invalidation --distribution-id XXXXXXXX --paths "/*"I had to setup my AWS Access ID and Secret Key in my projects variables:
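Those variables reach the build as environment variables, and the AWS CLI reads `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` from the environment. A missing one tends to show up late, as a failed `aws s3 sync`, so a quick check up front can save a pipeline run. This is a minimal sketch: `require_env` is a hypothetical helper, and it assumes the check lives in a small script file that the pipeline runs before the `aws` steps.

```shell
# Hypothetical helper: fail if any named variable is unset or empty in the
# environment that child processes (such as aws) will actually see.
require_env() {
    local status=0 name
    for name in "$@"; do
        # printenv only reports exported variables, which is what matters here.
        if [ -z "$(printenv "$name")" ]; then
            echo "missing environment variable: $name" >&2
            status=1
        fi
    done
    return "$status"
}
```

A call such as `require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY || exit 1` only prints variable names, never their values, so it is safe to leave in the build log.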

This pipeline for master reproduces the steps my original script does, but in a container, and not on my machine(s).

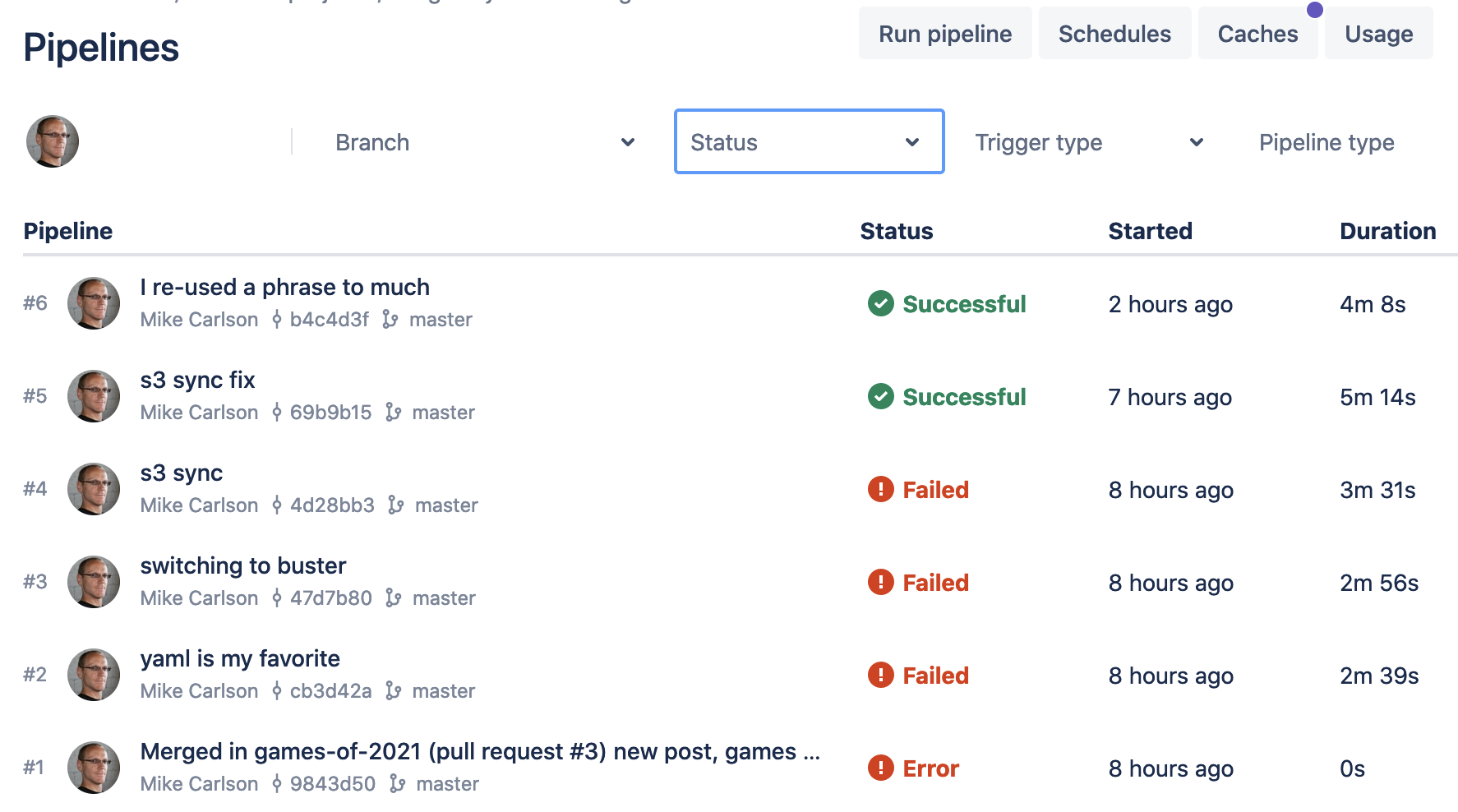

I have never ever gotten a successful deployment on the first try.

I am a lot happier with this process, as it removes another friction point between me and blogging. As much as I hated maintaining WordPress, it was really quick for me to create and edit a post. I completely recognize that once I switched over to Octopress and Hugo, my rate of blogging dropped off significantly.

This may be a short-term fix. I’ve considered moving to GitLab, as their git repo size limit is >2GB. If I do that, I’m not super worried about porting this over.