10g and 2.5g Networking

2024-02-26

This post is a combination of a lot of little things that I have been working on over the last few months. It kind of goes all over the place, but that is not ADHD related and I’m not having a manic moment. It just takes a lot of effort to do a post like this and I wanted to cover all of it at once.

Two things prompted all of this:

- Caralyne upgraded her computer, with her own funds, for the very first time. She finally doesn’t have a full-blown hand-me-down system

- I wanted to know why my Kubernetes pods and subsequent VMs were not writing faster to their local storage

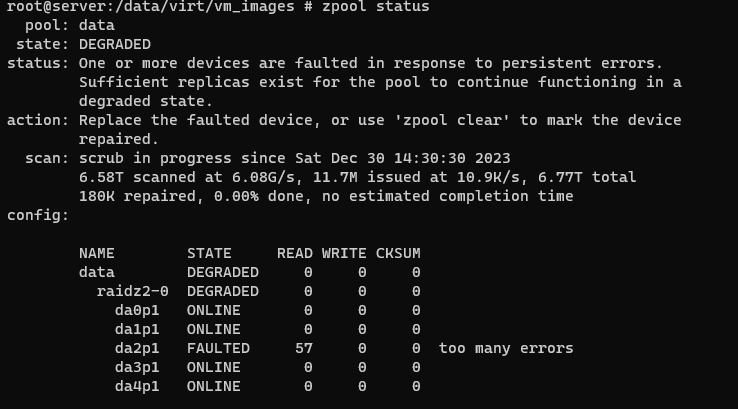

Oh, other things happened as well. I lost power suddenly multiple times in a week and a drive failed in my ZFS pool :/

First, we had a snow and ice storm. Power went out one late night, around 1am

Second, I was (as a non-professional electrician) futzing with our front porch switch. It’s tied to the porch and garage lights and I wanted to see if they could be split. Well, it turns out it’s on the same circuit as my internet and “infrastructure” devices. I caused a short and they all lost power.

Third, our dog groomer who comes to the house said she had to plug in. She did, and tripped the breaker. The same one all of my internet and stuff is on.

So, I knew I had to crack open my server to replace a drive. Back to Caralyne’s new system though, it came with 2.5Gb ethernet on-board.

Well, I cannot let that go to waste!

I have been eyeing >1Gb home ethernet ever since I had the chance to set up a beefy (at the time) ZFS Fibre Channel array with a 10Gb Intel NIC. At work, I’ve had multiple opportunities to set up and work with 10Gb and greater networking devices, and for the last 10 years I have been grumpily asking why 10Gb home networking was still so expensive.

That has recently changed, and I can now get 2.5Gb and 10Gb switches for less than $100. They are unmanaged of course, but I don’t demand that level of manageability here at Carlson Enterprises. We value your dollar and business.

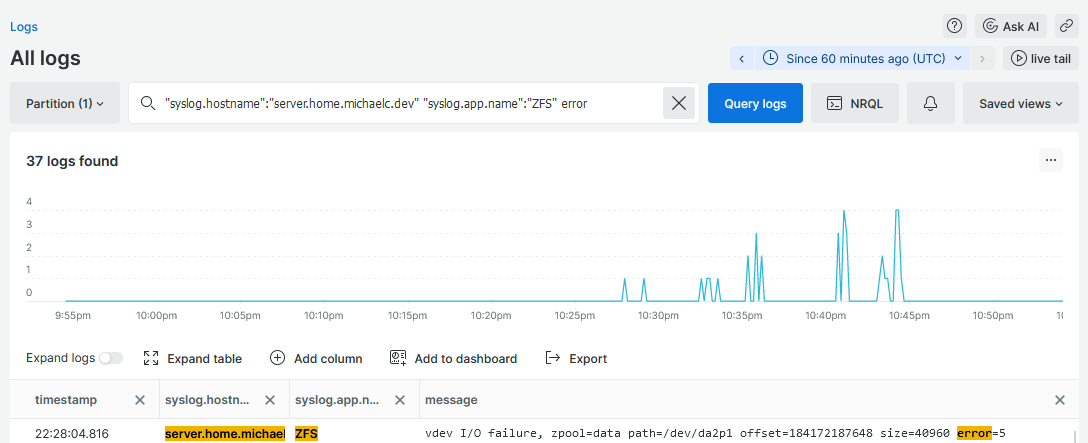

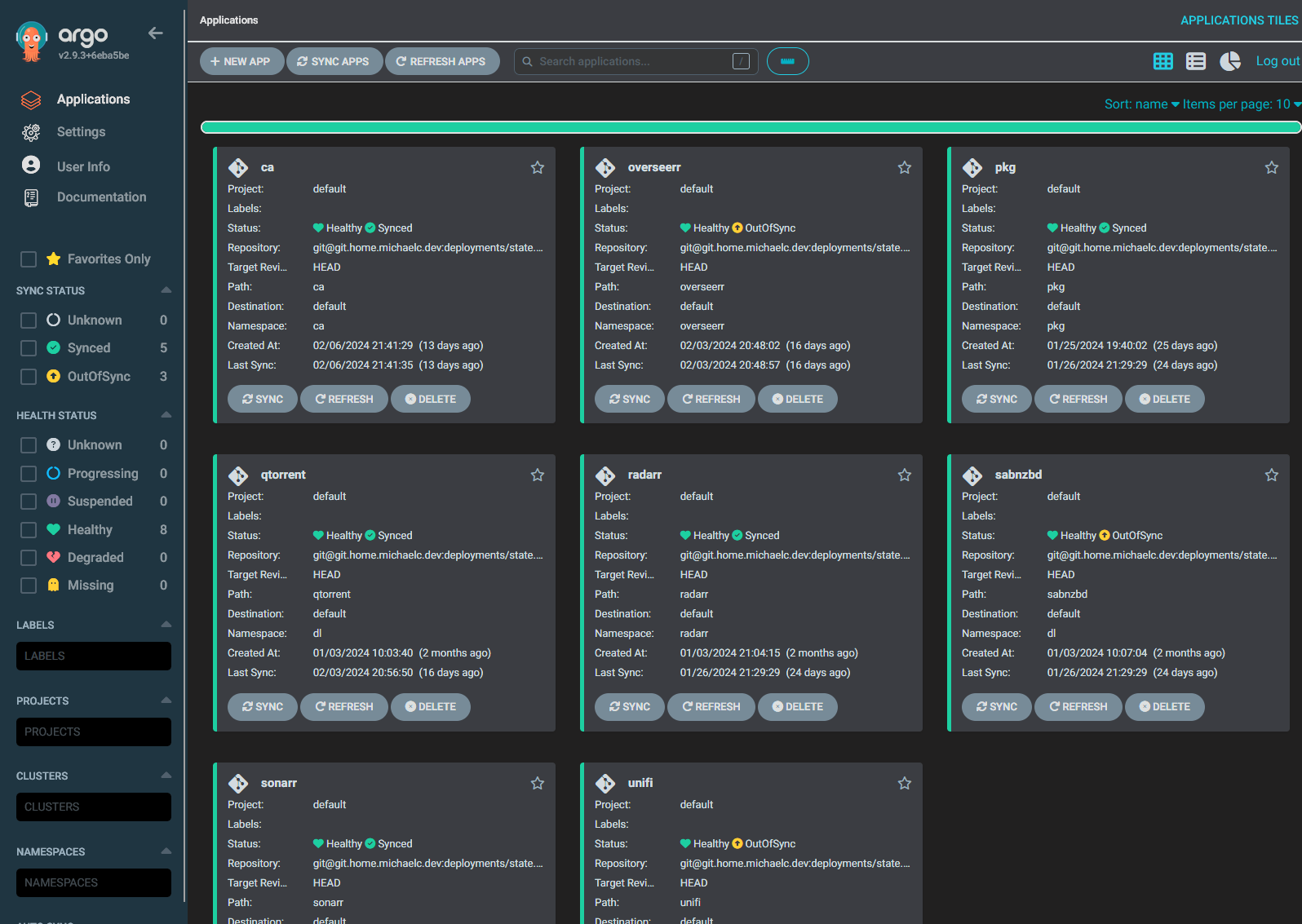

Kubernetes Stuff

So, what exactly do we got going on here?

A bunch of dashboards, that’s what!

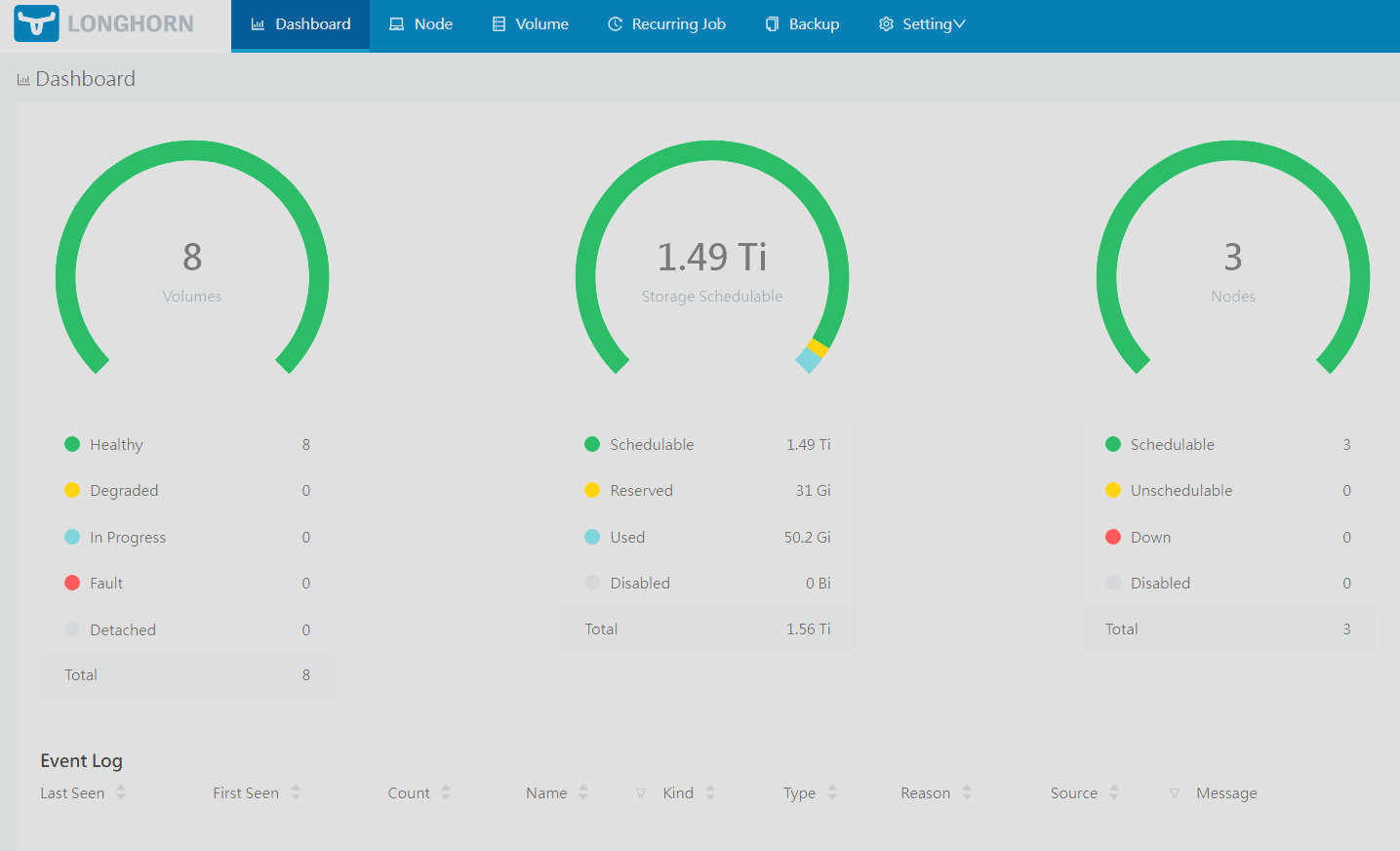

There are a lot of new services running here. Once I got Kubernetes set up, it was like opening the floodgates. I started to move everything I had into my little 3 node setup. Honestly, I’m super happy with it all. I like my mix of Longhorn for persistent storage, and mapping in NFS mounts to my large local ZFS pool.

Here’s a glance:

ArgoCD

Longhorn, my new favorite block storage:

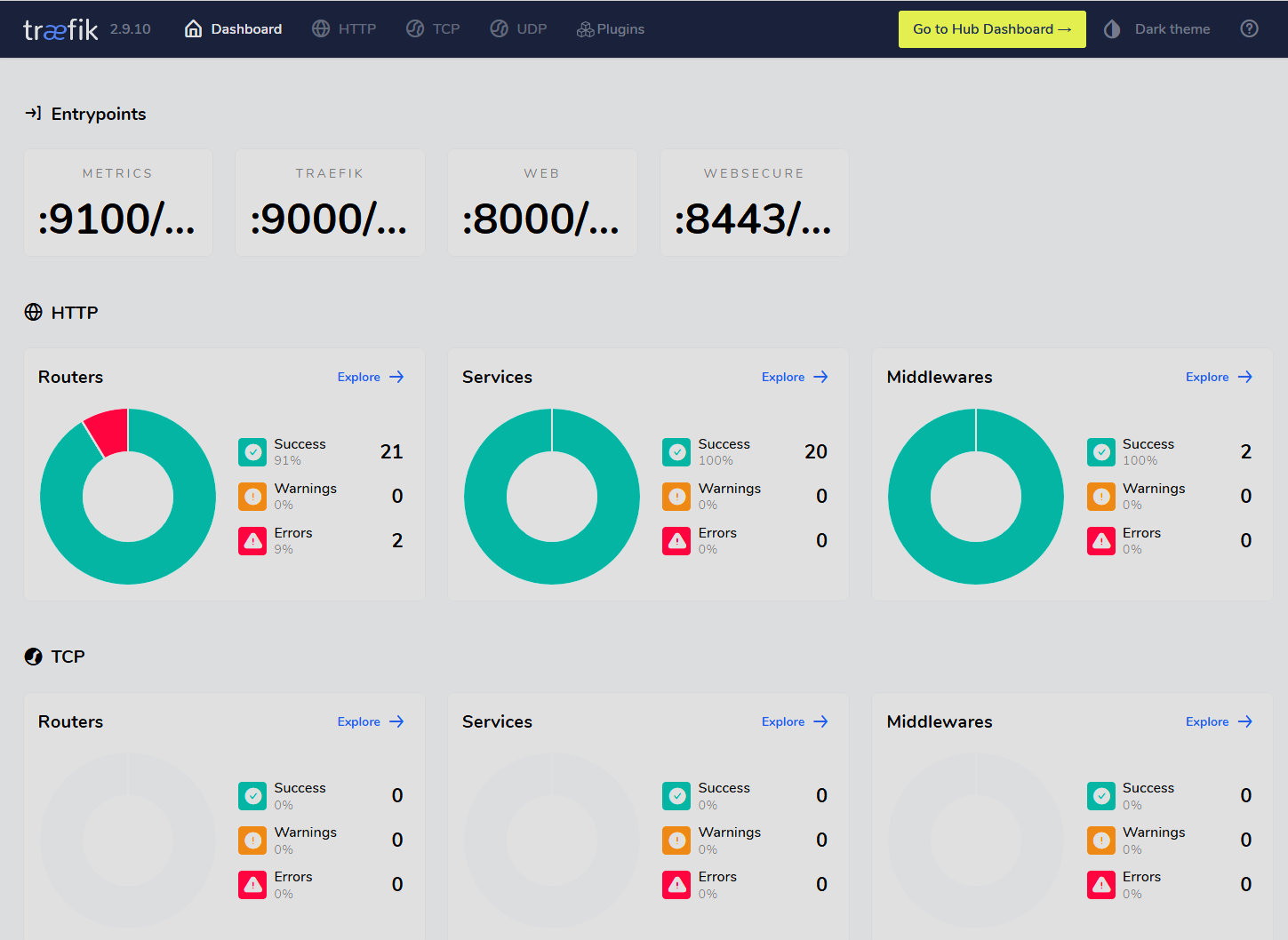

Rancher uses Traefik by default, so I got that set up:

I briefly installed the New Relic nri-bundle but with a free account I quickly exceeded my ingest quota and removed it. It’s fine, I don’t need that level of observability when most of my deployments are single pods.

Before

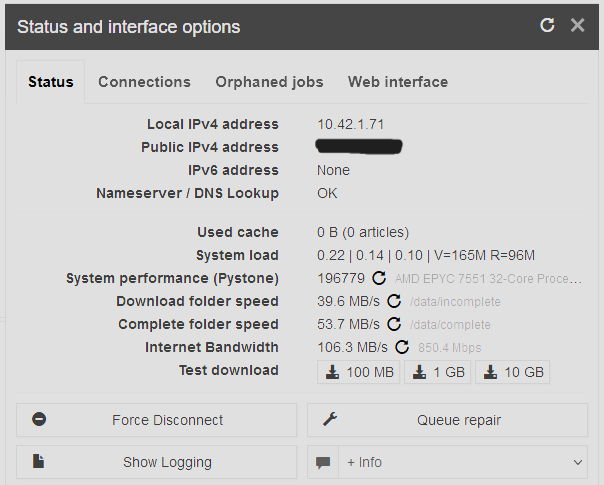

Anywho… I did a little benchmark that sabnzbd comes with, and it was writing at 11MB/sec

Seems really low. Let’s verify what my maximum write speeds can be:

root@server:/data/radarr/usenet # dd if=/dev/zero of=test.dat bs=8m count=1000

1000+0 records in

1000+0 records out

8388608000 bytes transferred in 2.984002 secs (2811193584 bytes/sec)

That’s 2.81GB/sec local writes. That is not unexpected, I have 128GB of RAM and 5 drives in a raidz2 that are capable of 600MB/sec.

So, let’s test a VM:
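As a rough sanity check on that number, here is a small sketch that redoes dd's arithmetic and compares it with a disk-bound ceiling. The ceiling is an assumption, not something measured here: it treats the 600MB/sec figure as a per-drive maximum and assumes a 5-disk raidz2 spends two disks' worth of each stripe on parity.

```python
# Sanity-check the dd result against a rough raidz2 ceiling.
# Assumptions (hypothetical, for illustration): 600 MB/s is the most any one
# drive can take, and raidz2 uses two disks' worth of each stripe for parity.

def dd_rate_gb_per_s(total_bytes: int, seconds: float) -> float:
    """Throughput in decimal GB/s, matching dd's bytes/sec figure."""
    return total_bytes / seconds / 1e9


def raidz2_data_ceiling_mb_per_s(drives: int, per_drive_mb_s: float) -> float:
    """Upper bound on user-data throughput if every byte had to hit disk."""
    return (drives - 2) * per_drive_mb_s


measured = dd_rate_gb_per_s(8_388_608_000, 2.984002)
ceiling = raidz2_data_ceiling_mb_per_s(5, 600.0)

print(f"measured by dd:      {measured:.2f} GB/s")
print(f"disk-bound ceiling:  {ceiling / 1000:.2f} GB/s")
```

Under those assumptions the measured rate sits above what the disks alone could absorb, which fits the explanation that RAM is soaking up the write. A stream of zeros may also compress very well if compression is enabled on the dataset, so this number is best read as an upper bound rather than sustained disk speed.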

[root@389-1 usenet]# dd if=/dev/zero of=test.dat bs=1024k count=1000 ; \rm test.dat

1000+0 records in

1000+0 records out

1048576000 bytes (1.0 GB, 1000 MiB) copied, 90.1188 s, 11.6 MB/s

Ouch…

Okay, so let’s start testing the local network

iperf3 -s

-----------------------------------------------------------

Server listening on 5201 (test #1)

-----------------------------------------------------------

Accepted connection from 192.168.1.114, port 15741

[ 5] local 192.168.1.15 port 5201 connected to 192.168.1.114 port 64085

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 11.1 MBytes 92.9 Mbits/sec

[ 5] 1.00-2.00 sec 11.1 MBytes 93.6 Mbits/sec

[ 5] 2.00-3.01 sec 11.2 MBytes 93.9 Mbits/sec

[ 5] 3.01-4.00 sec 11.1 MBytes 93.8 Mbits/sec

[ 5] 4.00-5.00 sec 11.1 MBytes 93.0 Mbits/sec

[ 5] 5.00-6.01 sec 11.2 MBytes 94.2 Mbits/sec

[ 5] 6.01-7.00 sec 11.1 MBytes 93.7 Mbits/sec

[ 5] 7.00-8.01 sec 11.2 MBytes 93.8 Mbits/sec

[ 5] 8.01-9.00 sec 11.0 MBytes 92.8 Mbits/sec

[ 5] 9.00-10.00 sec 11.2 MBytes 94.1 Mbits/sec

[ 5] 10.00-10.07 sec 768 KBytes 91.7 Mbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.07 sec 112 MBytes 93.6 Mbits/sec receiver

-----------------------------------------------------------

So, performance is mostly not great.
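To connect the two measurements, here is a quick unit conversion. iperf3 reports megabits per second while dd reports megabytes per second, so the iperf3 average has to be divided by 8 before comparing. The function name is made up for this sketch.

```python
# Convert the iperf3 average (megabits/sec) into megabytes/sec so it can be
# compared with the dd result from inside the VM.

def mbits_to_mbytes(mbits_per_s: float) -> float:
    """8 bits per byte; both units are decimal (10^6)."""
    return mbits_per_s / 8


iperf_mbits = 93.6   # receiver average from the iperf3 run above
dd_mbytes = 11.6     # dd result from inside the VM

net_mbytes = mbits_to_mbytes(iperf_mbits)
print(f"{iperf_mbits} Mbits/sec is {net_mbytes:.1f} MB/s; dd saw {dd_mbytes} MB/s")
```

The two line up almost exactly, which suggests the VM's writes are limited by the network path rather than by the disks. As a side observation, roughly 94 Mbits/sec is also about what a 100Mb/s Ethernet link can carry, so link negotiation somewhere along that path may be worth ruling out. Nothing in the output above confirms that either way.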

I was leaning towards a few things:

- bhyve is still fairly new and while functional, it is entirely possible it is not as performant as other hypervisors

- The virtio network drivers are not optimal

- VMs are using a tap interface on a 1Gb interface and are inherently limited by that

So, let’s get some new hardware and see if that has any impact!

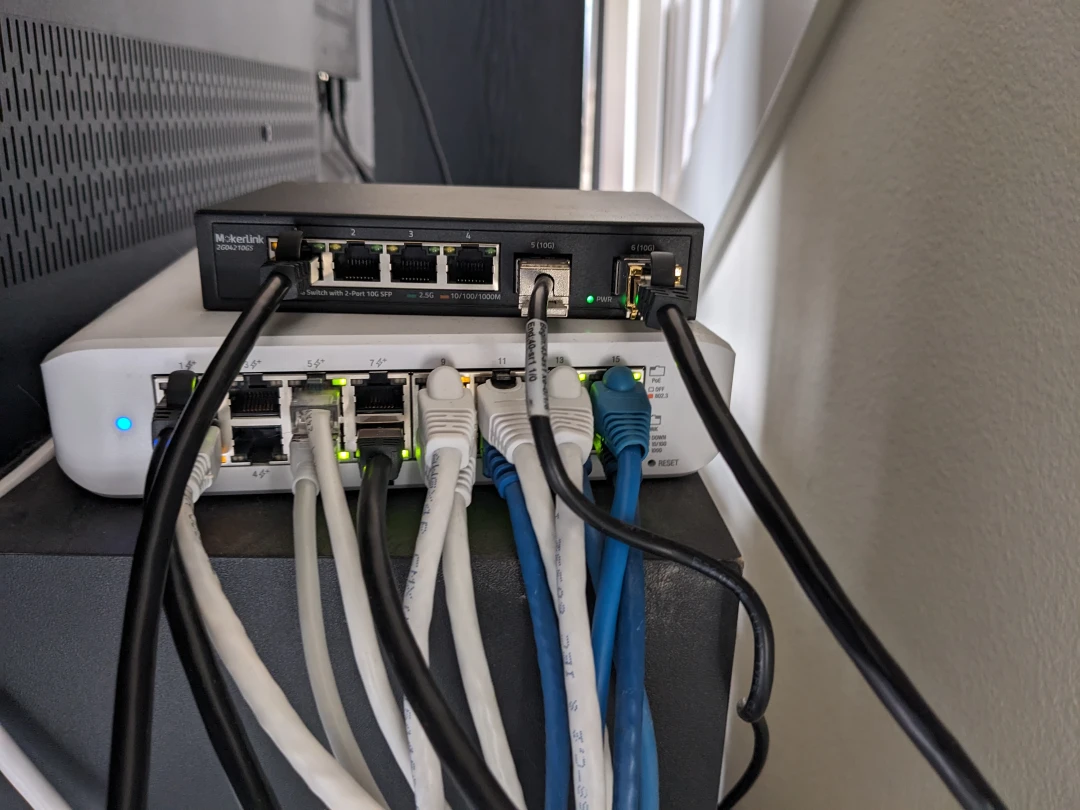

Hardware

I bought:

- 1 Mellanox 10Gb adapter MCX311A

- 1 Mokerlink 4 x 2.5Gb, 2 x 10Gb

- 1 Mokerlink 8 x 2.5Gb, 1 x 10Gb

My rationale for this was to get a 10Gb card for my server, since FreeBSD has really good 1/10/40/100Gb ethernet support, but 2.5Gb less so. I think there is one Intel chip that works reliably.

This is why I got one switch with four 2.5GbE ports and two 10GbE ports. The FreeBSD server will use a copper connector, while the other 10GbE connection will be the interlink between the two rooms.

The office gets the other switch with the single 10GbE port as the interlink, and Caralyne’s desktop will get a 2.5Gb port. I can decide to upgrade the other desktops to 2.5GbE in the future.

Install

This also allowed me to replace the failed drive in my ZFS pool

I love my moccasin slippers

And how did that drive replacement go? First was identifying it. I was able to pull the device handle and serial number out of the logs I ship to NR One.

root@server:~ > dmesg | grep '^da[0-9]'

da1 at mps0 bus 0 scbus1 target 11 lun 0

da1: <ATA HGST HDN724030AL A5E0> Fixed Direct Access SPC-4 SCSI device

da1: Serial Number PK2234P9JSYALY

da1: 600.000MB/s transfers

da1: Command Queueing enabled

da1: 2861588MB (5860533168 512 byte sectors)

da0 at mps0 bus 0 scbus1 target 10 lun 0

da0: <ATA HGST HDN724030AL A5E0> Fixed Direct Access SPC-4 SCSI device

da0: Serial Number PK2234P9J58SHY

da0: 600.000MB/s transfers

da0: Command Queueing enabled

da0: 2861588MB (5860533168 512 byte sectors)

da4 at mps0 bus 0 scbus1 target 15 lun 0

da4: <ATA HGST HDN724030AL A5E0> Fixed Direct Access SPC-4 SCSI device

da4: Serial Number PK2234P9K5MYAY

da4: 600.000MB/s transfers

da4: Command Queueing enabled

da4: 2861588MB (5860533168 512 byte sectors)

da3 at mps0 bus 0 scbus1 target 14 lun 0

da3: <ATA HGST HDN724030AL A5E0> Fixed Direct Access SPC-4 SCSI device

da3: Serial Number PK2238P3G4W13J

da3: 600.000MB/s transfers

da3: Command Queueing enabled

da3: 2861588MB (5860533168 512 byte sectors)

da2 at mps0 bus 0 scbus1 target 13 lun 0

da2: <ATA HGST HDN724030AL A5E0> Fixed Direct Access SPC-4 SCSI device

da2: Serial Number PK1234P9K7JREX

da2: 600.000MB/s transfers

da2: Command Queueing enabled

da2: 2861588MB (5860533168 512 byte sectors)

Then, formatting and preparing the new replacement drive:

root@server:~ > gpart create -s gpt da4

da4 created

root@server:~ > gpart add -t freebsd-zfs -a 4K da4

da4p1 added

Then replacing with zpool replace:

root@server:~ > zpool replace data da2p1 diskid/DISK-PK2234P9K5MYAYp1

And watch the status:

pool: data

state: DEGRADED

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Wed Jan 17 10:57:57 2024

3.41G scanned at 436M/s, 177M issued at 22.1M/s, 7.20T total

0B resilvered, 0.00% done, 3 days 22:52:35 to go

config:

NAME STATE READ WRITE CKSUM

data DEGRADED 0 0 0

raidz2-0 DEGRADED 0 0 0

da0p1 ONLINE 0 0 0

da1p1 ONLINE 0 0 0

replacing-2 DEGRADED 0 0 0

da2p1 OFFLINE 0 0 0

diskid/DISK-PK2234P9K5MYAYp1 ONLINE 0 0 0

diskid/DISK-PK1234P9K7JREXp1 ONLINE 0 0 0

diskid/DISK-PK2238P3G4W13Jp1 ONLINE 0 0 0

With that out of the way, back to network stuff:

pciconf output:

mlx4_core0@pci0:35:0:0: class=0x020000 rev=0x00 hdr=0x00 vendor=0x15b3 device=0x1003 subvendor=0x15b3 subdevice=0x0055

vendor = 'Mellanox Technologies'

device = 'MT27500 Family [ConnectX-3]'

class = network

subclass = ethernet

cap 01[40] = powerspec 3 supports D0 D3 current D0

cap 03[48] = VPD

cap 11[9c] = MSI-X supports 128 messages, enabled

Table in map 0x10[0x7c000], PBA in map 0x10[0x7d000]

cap 10[60] = PCI-Express 2 endpoint max data 256(256)

max read 512

link x4(x4) speed 8.0(8.0) ASPM disabled(L0s)

ecap 000e[100] = ARI 1

ecap 0003[148] = Serial 1 e41d2d0300256c20

ecap 0001[154] = AER 2 0 fatal 0 non-fatal 2 corrected

ecap 0019[18c] = PCIe Sec 1 lane errors 0

I also did some additional FreeBSD tuning:

loader.conf:

mlx4en_load="YES"

cc_cubic_load="YES"

cc_dctcp_load="YES"

rc.conf:

ifconfig_mlxen0="inet 192.168.1.15/24 -tso4 -tso6 -lro -vlanhwtso"

sysctl.conf:

kern.maxprocperuid=234306

net.link.tap.up_on_open=1

net.inet.ip.intr_queue_maxlen=2048

kern.ipc.maxsockbuf=16777216

net.inet.tcp.recvbuf_max=4194304

net.inet.tcp.recvspace=4192304

net.inet.tcp.sendbuf_inc=32768

net.inet.tcp.sendbuf_max=16777216

net.inet.tcp.sendspace=2097152
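For a feel of how those buffer limits relate to link speed, a common rule of thumb is the bandwidth-delay product (BDP): the number of bytes that can be in flight on a link at once. The sketch below is illustrative only. The round-trip times are assumed LAN values, not measurements from this network, and the two constants are copied from the sysctl.conf lines above.

```python
# Bandwidth-delay product: bytes "in flight" on a link for a given RTT.
# The RTT values below are assumptions for a home LAN, not measurements.

def bdp_bytes(link_bits_per_s: float, rtt_seconds: float) -> float:
    """Bytes needed in a socket buffer to keep the link full for one RTT."""
    return link_bits_per_s * rtt_seconds / 8


SENDBUF_MAX = 16_777_216  # net.inet.tcp.sendbuf_max from sysctl.conf above
RECVBUF_MAX = 4_194_304   # net.inet.tcp.recvbuf_max from sysctl.conf above

for label, rate in (("2.5Gb", 2.5e9), ("10Gb", 10e9)):
    for rtt_ms in (0.2, 1.0):
        bdp = bdp_bytes(rate, rtt_ms / 1000)
        fits = bdp <= min(SENDBUF_MAX, RECVBUF_MAX)
        print(f"{label} at {rtt_ms} ms RTT: BDP {bdp / 1024:.0f} KiB, "
              f"fits within configured maximums: {fits}")
```

At LAN latencies even a 10Gb link needs only around a megabyte in flight, so the configured maximums leave plenty of headroom under these assumptions.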

so many cat8 cables

my desk has more blinky lights

switches in the other room. Cats usually enjoy this spot, because it’s warm

After

Back to iperf

Re-testing a VM, after I reconfigured bhyve’s network to use the 10Gb interface

root@git-1:~ > iperf3 -c 192.168.1.15

Connecting to host 192.168.1.15, port 5201

[ 5] local 192.168.1.114 port 51410 connected to 192.168.1.15 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.03 sec 210 MBytes 1.71 Gbits/sec 0 1.61 MBytes

[ 5] 1.03-2.06 sec 211 MBytes 1.71 Gbits/sec 0 1.61 MBytes

[ 5] 2.06-3.01 sec 191 MBytes 1.69 Gbits/sec 0 1.61 MBytes

[ 5] 3.01-4.03 sec 204 MBytes 1.68 Gbits/sec 0 1.61 MBytes

[ 5] 4.03-5.03 sec 210 MBytes 1.76 Gbits/sec 0 1.61 MBytes

[ 5] 5.03-6.02 sec 205 MBytes 1.74 Gbits/sec 0 1.61 MBytes

[ 5] 6.02-7.02 sec 211 MBytes 1.77 Gbits/sec 0 1.61 MBytes

[ 5] 7.02-8.00 sec 219 MBytes 1.86 Gbits/sec 0 1.61 MBytes

[ 5] 8.00-9.02 sec 223 MBytes 1.84 Gbits/sec 0 1.61 MBytes

[ 5] 9.02-10.02 sec 216 MBytes 1.81 Gbits/sec 0 1.61 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.02 sec 2.05 GBytes 1.76 Gbits/sec 0 sender

[ 5] 0.00-10.03 sec 2.05 GBytes 1.76 Gbits/sec receiver

Now a dd test

[root@docker-1 usenet]> dd if=/dev/zero of=test.dat bs=1024k count=1000 ; \rm test.dat

1000+0 records in

1000+0 records out

1048576000 bytes (1.0 GB, 1000 MiB) copied, 4.71894 s, 222 MB/s

[root@docker-1 usenet]>

Now, inside a container:

root@sabnzbd-678cf675b4-6kr9m:/data# dd if=/dev/zero of=test.dat bs=1024k count=1000 ; \rm test.dat

1000+0 records in

1000+0 records out

1048576000 bytes (1.0 GB, 1000 MiB) copied, 5.44275 s, 193 MB/s

However, sabnzbd only manages 30-40MB/s

So while it is an improvement, it is still not peak write performance. I should test with a VM and not a k8s deployment, and then look at how sabnzbd writes data.

Finally, I have the output of Caralyne’s new fancy 2.5GbE test:
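One way to start poking at the write pattern question is a small benchmark that writes the same amount of data with different chunk sizes and calls fsync before stopping the clock, so cached writes are not counted as finished. This is only a sketch: it is not how sabnzbd actually writes, the chunk sizes are guesses, and it writes to a temporary directory that would need to be pointed at the real data mount to mean anything.

```python
import os
import tempfile
import time


def write_rate_mb_s(path: str, total_bytes: int, chunk_bytes: int) -> float:
    """Write total_bytes in chunk_bytes pieces, fsync, return decimal MB/s."""
    chunk = b"\0" * chunk_bytes
    written = 0
    start = time.perf_counter()
    # buffering=0 gives an unbuffered file, so each write() is one syscall.
    with open(path, "wb", buffering=0) as f:
        while written < total_bytes:
            written += f.write(chunk)
        os.fsync(f.fileno())  # make sure the data has left the page cache
    elapsed = time.perf_counter() - start
    return written / elapsed / 1e6


# Kept small (8 MiB per run) so the sketch finishes quickly; a real test
# would use far more data and a directory on the storage being examined.
with tempfile.TemporaryDirectory() as scratch:
    target = os.path.join(scratch, "test.dat")
    for chunk in (4 * 1024, 64 * 1024, 1024 * 1024):
        rate = write_rate_mb_s(target, 8 * 1024 * 1024, chunk)
        print(f"chunk {chunk // 1024:>5} KiB: {rate:.0f} MB/s")
```

If small chunks come out much slower than the 1 MiB case on the NFS-backed path, that would point at per-write overhead rather than raw bandwidth. If they are all similar, the bottleneck is probably elsewhere.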

-----------------------------------------------------------

Server listening on 5201 (test #2)

-----------------------------------------------------------

Accepted connection from 192.168.1.30, port 55688

[ 5] local 192.168.1.15 port 5201 connected to 192.168.1.30 port 55689

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 282 MBytes 2.36 Gbits/sec

[ 5] 1.00-2.00 sec 283 MBytes 2.37 Gbits/sec

[ 5] 2.00-3.00 sec 284 MBytes 2.37 Gbits/sec

[ 5] 3.00-4.01 sec 285 MBytes 2.37 Gbits/sec

[ 5] 4.01-5.03 sec 288 MBytes 2.37 Gbits/sec

[ 5] 5.03-6.01 sec 278 MBytes 2.37 Gbits/sec

[ 5] 6.01-7.00 sec 281 MBytes 2.37 Gbits/sec

[ 5] 7.00-8.01 sec 284 MBytes 2.36 Gbits/sec

[ 5] 8.01-9.00 sec 280 MBytes 2.37 Gbits/sec

[ 5] 9.00-10.00 sec 283 MBytes 2.37 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

Not bad! This is between rooms, the front office and the server switch. I ran and crimped the cables and keystones myself with cat6a, so that’s pretty cool that it at least performs that well.
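For reference, 2.37 Gbits/sec is about as good as a 2.5Gb link gets for TCP. The sketch below estimates the ceiling from standard Ethernet framing overhead. It assumes a 1500 byte MTU and IPv4 and TCP headers with no options, neither of which is confirmed by the output above.

```python
# Rough TCP goodput ceiling for an Ethernet link.
# Assumptions: standard 1500 byte MTU, IPv4 + TCP headers without options.

ETH_OVERHEAD = 8 + 14 + 4 + 12  # preamble+SFD, header, FCS, inter-frame gap
IP_TCP_HEADERS = 20 + 20        # IPv4 header + TCP header, no options


def tcp_goodput_gbits(link_gbits: float, mtu: int = 1500) -> float:
    """Payload bits per second that fit once framing and headers are paid."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_OVERHEAD
    return link_gbits * payload / on_wire


for link in (1.0, 2.5, 10.0):
    print(f"{link:>4} Gb link: about {tcp_goodput_gbits(link):.2f} Gbits/sec of TCP payload")
```

With those assumptions a 2.5Gb link tops out at roughly 2.37 Gbits/sec, so the home-crimped cat6a run is delivering essentially everything the link can carry. TCP options such as timestamps would lower the ceiling slightly.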

Conclusions for Now

Of all of this, one very important BIOS setting I forgot to investigate was SR-IOV, which basically enables virtualization support on the Mellanox NIC for my hypervisor. Well, after a BIOS and Redfish update, and enabling SR-IOV in both the BIOS and the Mellanox firmware, I get zero SR-IOV Ready kernel messages:

pciconf -lvbc mlx4_core0

mlx4_core0@pci0:35:0:0: class=0x020000 rev=0x00 hdr=0x00 vendor=0x15b3 device=0x1003 subvendor=0x15b3 subdevice=0x0055

vendor = 'Mellanox Technologies'

device = 'MT27500 Family [ConnectX-3]'

class = network

subclass = ethernet

bar [10] = type Memory, range 64, base 0xebc00000, size 1048576, enabled

bar [18] = type Prefetchable Memory, range 64, base 0x191c7800000, size 8388608, enabled

cap 01[40] = powerspec 3 supports D0 D3 current D0

cap 03[48] = VPD

cap 11[9c] = MSI-X supports 128 messages, enabled

Table in map 0x10[0x7c000], PBA in map 0x10[0x7d000]

cap 10[60] = PCI-Express 2 endpoint max data 256(256)

max read 512

link x4(x4) speed 8.0(8.0) ASPM disabled(L0s)

ecap 000e[100] = ARI 1

ecap 0003[148] = Serial 1 e41d2d0300256c20

ecap 0001[154] = AER 2 0 fatal 0 non-fatal 1 corrected

ecap 0019[18c] = PCIe Sec 1 lane errors 0

I know with iovctl in FreeBSD, you can set this up with a supported NIC. AFAIK, however, only the Chelsio and Intel drivers support this. I’ll have to update my BIOS settings and see if mlx4en can utilize this feature.

I might pick up a cheap(ish) Chelsio card on eBay, just to test this out. Maybe I’ll put the Mellanox in my desktop for fun.

Also, since you’re all the way down at the bottom, I did get a UPS for my server and networking devices. Hopefully just enough to prevent these little outages.