New ZFS Pool

2022-05-23

I was fortunate enough to get some free drives from someone at work. They live in Austin, and offered up a big heavy box of hard drives to anyone who would pay for shipping. I’ve had a very simple ZFS pool for around 5 years now, composed of 3 2TB drives in a raidz1 with a cold spare.

There have been no issues, and I scrub the drives monthly. However, ever since I placed my /home volume on the ZFS pool, I have noticed some large delays when working with a git repo.

So, this was a nice upgrade. I’m still using spinning rust, and I’d like to at least move my home directory pool to a few SSDs instead of large traditional HDDs, but this is a good upgrade for now.

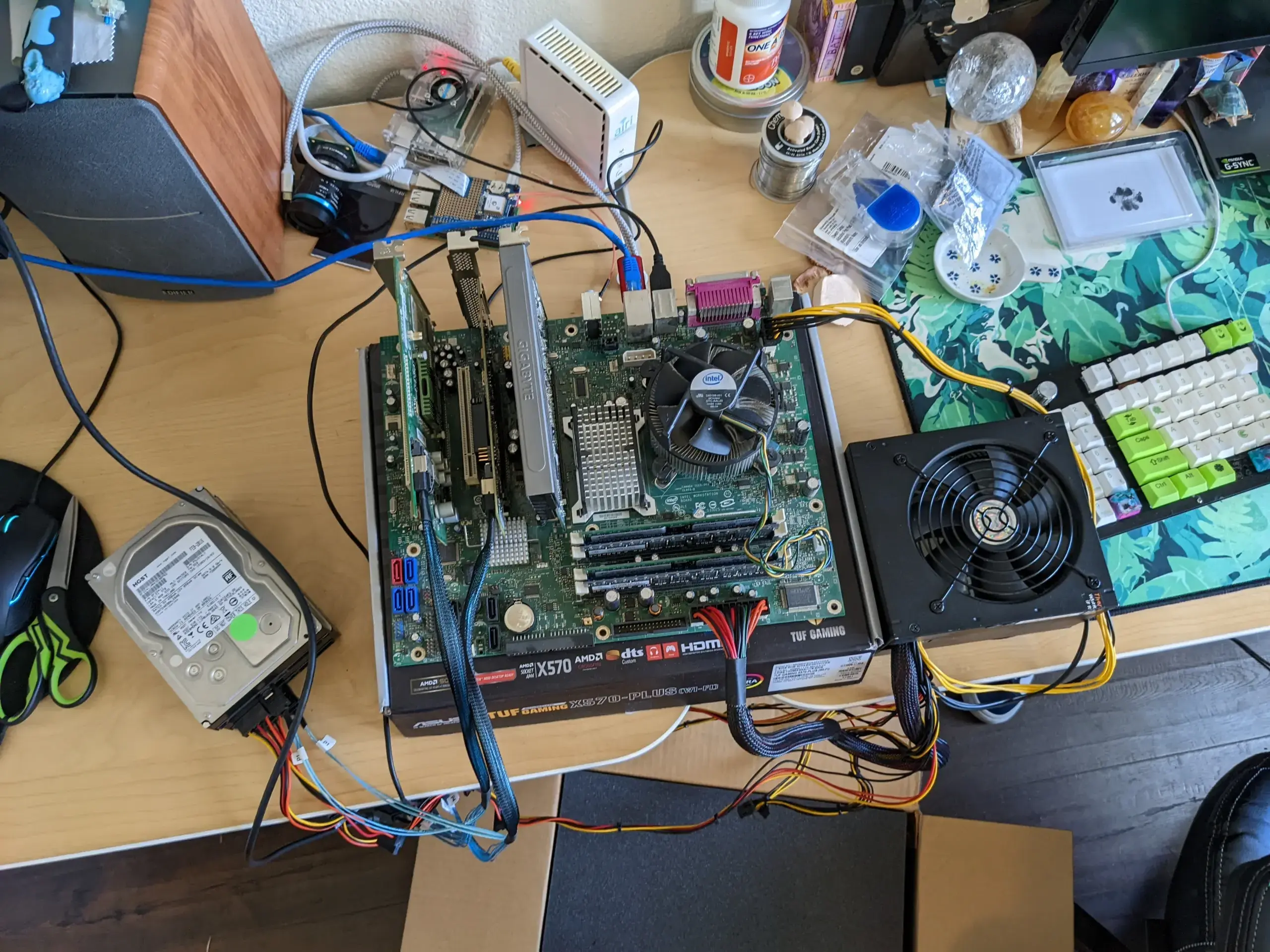

I didn’t want to take down my server, because I don’t have enough space for two zpools (more on that…), so I set up a temporary system to create the new pool and do a zfs send | recv between the two hosts.
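A transfer like this is usually a recursive snapshot piped through ssh. The sketch below is only an illustration of that pattern, not the exact commands used here: the source pool name `tank`, the snapshot name `migrate`, and the helper `print_replication_plan` are all hypothetical, and the function only prints the commands so they can be reviewed before anything is run.

```shell
#!/bin/sh
# Sketch only: prints the commands for a recursive ZFS replication over ssh.
# "tank" (source pool) and "migrate" (snapshot name) are hypothetical names.

print_replication_plan() {
    src_pool=$1    # pool to copy from, e.g. tank (hypothetical)
    dst_host=$2    # host that holds the new pool, e.g. builder
    dst_pool=$3    # pool to copy into, e.g. data
    snap=$4        # snapshot name, e.g. migrate (hypothetical)

    # 1. Recursive snapshot, so every dataset is captured at the same point in time.
    echo "zfs snapshot -r ${src_pool}@${snap}"
    # 2. Send a replication stream (-R: child datasets, snapshots, properties)
    #    and receive it on the other host (-F: force the destination to match
    #    the stream, -u: do not mount the received datasets).
    echo "zfs send -R ${src_pool}@${snap} | ssh ${dst_host} zfs recv -Fu ${dst_pool}"
}

print_replication_plan tank builder data migrate
```

Printing the plan first is a deliberate choice: `zfs recv -F` overwrites whatever is on the destination, so it is worth reading the pipeline before pasting it into a root shell.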

I had successfully taken over the shared space between Caralyne and me, under classic eminent domain.

This temp build was a little tricky. I only have two spare motherboards: a very old Intel workstation board and a really slim Dell board. I needed at least one PCIe x8 slot for the HBA I wanted to use. I also needed enough SATA and SATA power connectors from a power supply. I have given away some components to help other people build PCs during the pandemic and chip shortage, so this was a little challenging.

In the end, I used the old Intel board, and I was able to frankenstein a power supply.

This system required a screwdriver to boot, and sometimes it didn't

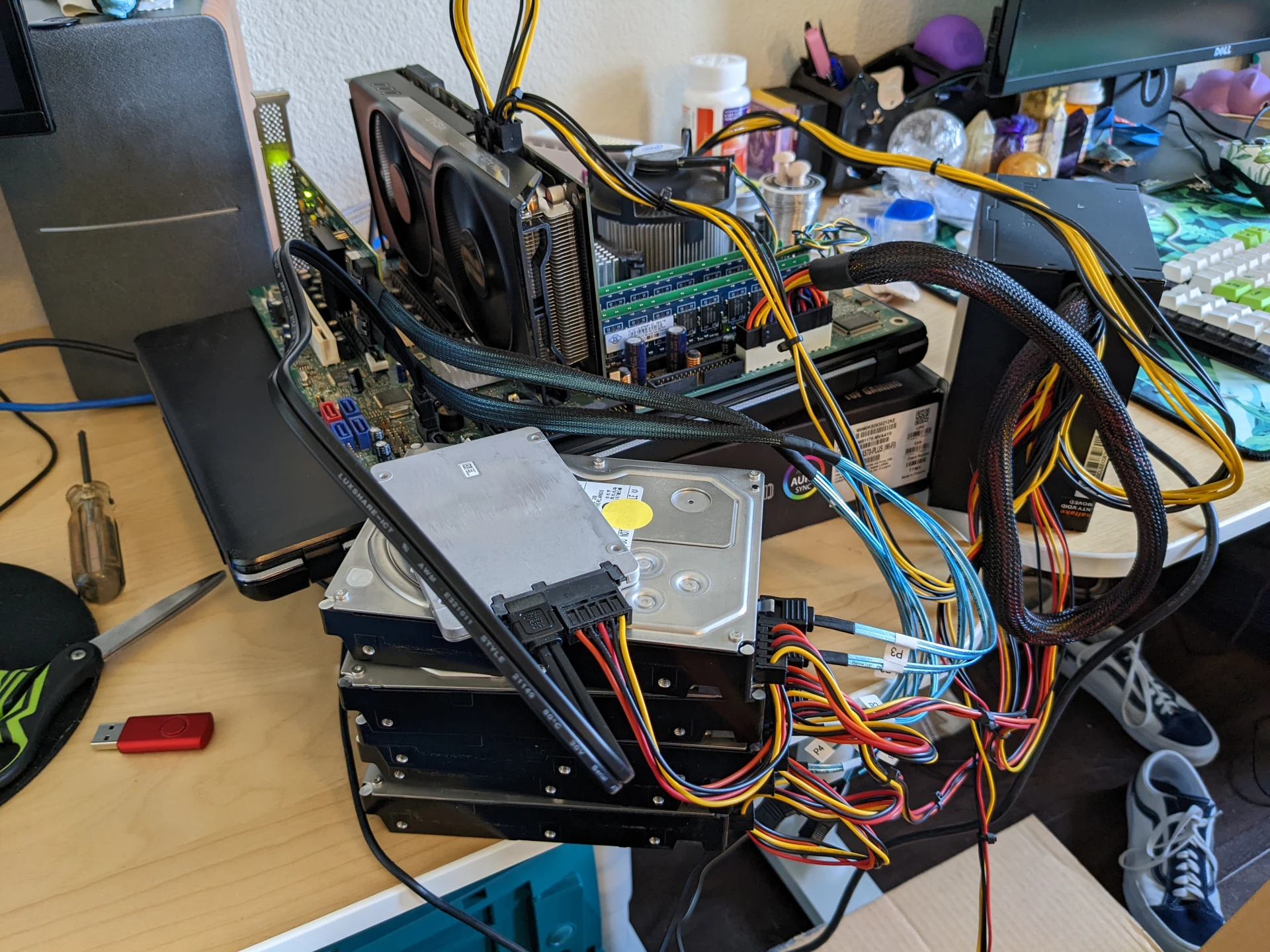

One of the only spare video cards I have is an Nvidia 970, which requires additional power and made booting with all of these drives a bit spotty. It’s the only card I have that works and is compatible with my new monitor; without it I couldn’t have started the install process.

All 5 drives are set up, and I got a temp FreeBSD install on the SSD there. Time to build the array.

What is different from my other zpool is that I’m using a real HBA now. I had used the onboard SATA controller for a few years. Not too long ago, though, I picked up an LSI-based controller on eBay for cheap. I had to buy the cable separately.

Controller:

root@builder:~ # mpsutil show adapter

mps0 Adapter:

Board Name: SAS9211-8i

Board Assembly: H3-25250-02F

Chip Name: LSISAS2008

Chip Revision: ALL

BIOS Revision: 7.27.00.00

Firmware Revision: 14.00.00.00

Integrated RAID: yes

PhyNum CtlrHandle DevHandle Disabled Speed Min Max Device

0 N 1.5 6.0 SAS Initiator

1 N 1.5 6.0 SAS Initiator

2 0001 0009 N 6.0 1.5 6.0 SAS Initiator

3 0002 000a N 6.0 1.5 6.0 SAS Initiator

4 N 1.5 6.0 SAS Initiator

5 0003 000b N 6.0 1.5 6.0 SAS Initiator

6 0004 000c N 6.0 1.5 6.0 SAS Initiator

7 0005 000d N 6.0 1.5 6.0 SAS Initiator

I really should update the firmware; it’s very old. It’s also working, so… ¯\(ツ)/¯

gpart and zfs creation:

root@builder:~ # gpart create -s gpt da0

da0 created

root@builder:~ # gpart add -t freebsd-zfs -a 4k da0

da0p1 added

root@builder:~ # gpart create -s gpt da1

da1 created

root@builder:~ # gpart add -t freebsd-zfs -a 4k da1

da1p1 added

root@builder:~ # gpart create -s gpt da2

da2 created

root@builder:~ # gpart add -t freebsd-zfs -a 4k da2

da2p1 added

root@builder:~ # gpart create -s gpt da3

da3 created

root@builder:~ # gpart add -t freebsd-zfs -a 4k da3

da3p1 added

root@builder:~ # gpart create -s gpt da4

da4 created

root@builder:~ # gpart add -t freebsd-zfs -a 4k da4

da4p1 added

root@builder:~ # zpool create data raidz2 da0p1 da1p1 da2p1 da3p1 da4p1

root@builder:~ # zfs list

NAME USED AVAIL REFER MOUNTPOINT

data 639K 7.82T 170K /data

root@builder:~ # df -h

Filesystem Size Used Avail Capacity Mounted on

/dev/ada0s1a 105G 4.8G 91G 5% /

devfs 1.0K 1.0K 0B 100% /dev

data 7.8T 171K 7.8T 0% /data

I had to look up how to handle 4k sectors in FreeBSD 13.0-RELEASE versus how I used to do it (with geom/gnop). I like how it is done now, with the -a 4k option to gpart add, which aligns the partition on a 4k boundary. I did verify with zdb that ashift was 12:

root@builder:/data # zdb -C|grep ashift

ashift: 12

I also went with a raidz2. I’m not running out of storage so badly that I need the extra TBs, so the more parity the better.
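The five gpart create/add pairs earlier differ only in the device name, so they could be collapsed into a loop. A minimal sketch, assuming the same da0 through da4 device names; `print_partition_plan` is a made-up helper that only prints the commands and changes nothing on disk.

```shell
#!/bin/sh
# Sketch: emit the gpart commands for a list of disks instead of typing each pair.
# print_partition_plan is a hypothetical helper; it prints commands only.

print_partition_plan() {
    for disk in "$@"; do
        # New GPT partition table on the whole disk.
        echo "gpart create -s gpt ${disk}"
        # One freebsd-zfs partition using the free space, aligned to a 4k boundary.
        echo "gpart add -t freebsd-zfs -a 4k ${disk}"
    done
}

print_partition_plan da0 da1 da2 da3 da4
```

Once the printed commands look right, piping the output to `sh` as root would run them.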

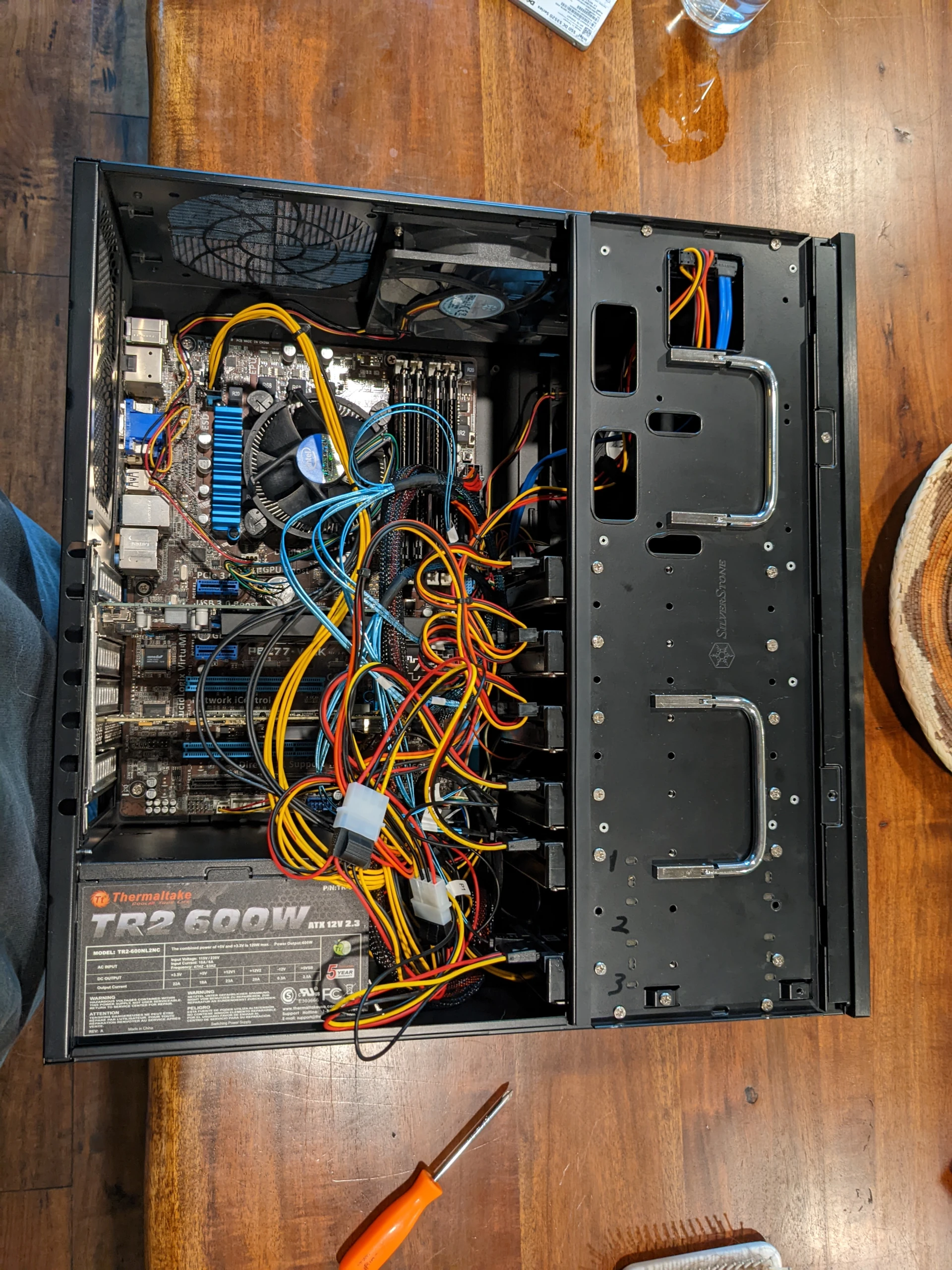

First attempt to install the drives, after a successful series of zfs send/recv

POST-ing. I have to keep a keyboard plugged in or else the system never boots up

I tried installing ONE more drive, and it was a failure. First, I didn't have enough SATA power cables, so I ordered Molex-to-SATA power converters. Well, I got the wrong kind (male vs. female), so I bought a new set. The system just wouldn't power on with that 7th HDD installed. It sounded like an old car trying to start. I had to tear it all down and re-arrange the drives for the 4th time.

I’ll hold off on buying a new power supply to find out whether that would let the system POST with the 7th drive installed. I have a good amount of storage now, even after copying all of my data. Performance is a lot nicer: working with git and everything else in my home directory, zsh helpers included, is no longer starved for I/O.

mcarlson@server Video/Movies » zpool status

pool: data

state: ONLINE

scan: scrub repaired 0B in 04:20:11 with 0 errors on Sun May 1 04:20:11 2022

config:

NAME STATE READ WRITE CKSUM

data ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

da0p1 ONLINE 0 0 0

da1p1 ONLINE 0 0 0

da2p1 ONLINE 0 0 0

da3p1 ONLINE 0 0 0

da4p1 ONLINE 0 0 0

errors: No known data errors

mcarlson@server Video/Movies » zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

data 13.6T 6.16T 7.48T - - 1% 45% 1.00x ONLINE -

mcarlson@server Video/Movies »
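The two size figures in this post measure different things: for raidz vdevs, `zpool list` reports raw capacity including parity, while `zfs list` reports usable space. As a rough check, 5 drives in raidz2 give up two drives' worth of space to parity, so about 3/5 of the raw size is usable. The helper name `raidz_usable` is made up for illustration, and the estimate ignores metadata, padding, and reserved space, which is why it lands a little above the 7.82T that `zfs list` showed on the empty pool.

```shell
#!/bin/sh
# Rough raidz usable-space estimate: raw * (drives - parity) / drives.
# raidz_usable is a hypothetical helper; it ignores metadata and reserved space.

raidz_usable() {
    raw=$1       # SIZE from zpool list, e.g. 13.6
    drives=$2    # number of drives in the vdev, e.g. 5
    parity=$3    # 1 for raidz1, 2 for raidz2, 3 for raidz3
    awk -v r="$raw" -v n="$drives" -v p="$parity" \
        'BEGIN { printf "%.2f\n", r * (n - p) / n }'
}

raidz_usable 13.6 5 2   # prints 8.16
```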