Xen and Convirture

2012-04-10

My previous experience with a large virtual “enterprise” environment was with VMware’s ESXi and vSphere.

Performance-wise, I was always fairly happy with ESXi, keeping in mind, of course, that we were running virtual machines. There were a few issues, as I recall:

- Silent data corruption (fsck’ing Linux and FreeBSD volumes would reveal inconsistent filesystem information, but no errors were ever reported to the VM)

- Live migrations were not always stable. In fact, most of the time a migration would result in an unexpected shutdown.

I doubt it was solely VMware’s fault, as it could have been a series of misconfigurations and poor implementation decisions. Again, that is not a black mark against VMware; it is just part of my own personal experience.

What IS a black mark against VMware is how expensive their product is. I found out recently that version 5 now ties licensing to memory: each per-processor license comes with a fixed memory entitlement, so the more memory you allocate to running VMs, the more licenses you need. vRAM, as they like to call it.

This is a problem in our environment, since memory is really valuable when virtualizing: shared disk subsystems will ALWAYS be an order of magnitude slower than dedicated disks. By letting the OS’s virtual memory layer do all of the caching in RAM, we can load up a hypervisor server with as much RAM as is reasonably priced and give each VM a few gigs to work with.

That is what we did at Bay Photo Lab :)

We spec’d two servers, each with four AMD Opteron 6220 processors and 128GB of system memory.

That gave us 64 cores and ~254GB of usable memory in total (Dom0 uses ~2GB). The price point of AMD over Intel was too good to pass up: we got double the resources for nearly half the price.
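As a quick sanity check of those totals, here is the arithmetic as a small Python sketch. It assumes eight cores per Opteron 6220 socket (which is what 64 cores across eight sockets implies) and treats Dom0’s ~2GB as a single deduction for the whole cluster, which is how the ~254GB figure works out; if Dom0 holds 2GB on each host, usable memory comes to about 252GB instead.

```python
# Capacity arithmetic for the two Xen hosts described above.
SERVERS = 2
SOCKETS_PER_SERVER = 4
CORES_PER_SOCKET = 8        # Opteron 6220: 8 cores per socket (64 cores / 8 sockets)
RAM_PER_SERVER_GB = 128


def cluster_capacity(dom0_reserved_gb: float = 2.0) -> dict:
    """Return total cores, installed RAM, and RAM left over for guests.

    dom0_reserved_gb is an assumption: the total memory held back for Dom0
    across the whole cluster (the post quotes ~2GB).
    """
    cores = SERVERS * SOCKETS_PER_SERVER * CORES_PER_SOCKET
    raw_ram_gb = SERVERS * RAM_PER_SERVER_GB
    return {
        "cores": cores,
        "raw_ram_gb": raw_ram_gb,
        "guest_ram_gb": raw_ram_gb - dom0_reserved_gb,
    }


print(cluster_capacity())           # ~2GB for Dom0 in total
print(cluster_capacity(2 * 2.0))    # ~2GB for Dom0 on each host
```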

Rob had already built a nice GlusterFS cluster, so we use the Gluster FUSE client on our Xen servers to access the shared storage. This works out nicely. It isn’t the fastest storage (we are currently on a 1Gb network), but we have the option to upgrade individual pieces, such as moving to InfiniBand or 10Gb Ethernet.
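If a host (or a monitoring check) needs to confirm the shared storage is actually mounted before guests are started, one option is to look for the GlusterFS FUSE client in /proc/mounts, where it shows up with the filesystem type `fuse.glusterfs`. This is a minimal sketch under that assumption; the server name, volume, and mount point in the sample are hypothetical.

```python
from typing import List, Tuple


def gluster_mounts(proc_mounts: str) -> List[Tuple[str, str]]:
    """Return (source, mount_point) pairs for GlusterFS FUSE mounts.

    Each /proc/mounts line reads: device mount_point fstype options dump pass.
    """
    found = []
    for line in proc_mounts.splitlines():
        fields = line.split()
        # The native GlusterFS client is a FUSE filesystem with subtype "glusterfs".
        if len(fields) >= 3 and fields[2] == "fuse.glusterfs":
            found.append((fields[0], fields[1]))
    return found


# Hypothetical sample; on a real host, pass the contents of /proc/mounts instead.
SAMPLE = (
    "gluster1:/vmstore /mnt/vmstore fuse.glusterfs rw,relatime,user_id=0,group_id=0 0 0\n"
    "/dev/sda1 / ext4 rw,relatime 0 0\n"
)
print(gluster_mounts(SAMPLE))
```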

Before this new environment, we were creating Xen configuration files by hand. That is OK for Rob and me, maybe, but not for anyone else, so I started looking for a user interface.
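For context, a hand-written Xen (xm) domain configuration is a short file of Python-style assignments. The sketch below renders one for a fully virtualized (HVM) guest; the guest name, image path, and sizes are hypothetical placeholders, and the `file:` disk backend and `hda` device name are assumptions rather than what we actually used. The `xenbr0` bridge name is the one on our hosts.

```python
def render_xm_config(name: str, memory_mb: int, vcpus: int,
                     disk_image: str, bridge: str = "xenbr0") -> str:
    """Render a minimal xm config file for an HVM guest (illustrative only)."""
    lines = [
        f'name = "{name}"',
        'builder = "hvm"',                        # full virtualization
        f"memory = {memory_mb}",                  # guest RAM in MB
        f"vcpus = {vcpus}",
        f'disk = ["file:{disk_image},hda,w"]',    # image file on shared storage
        f'vif = ["bridge={bridge}"]',             # attach the NIC to the Xen bridge
        "vnc = 1",                                # expose a VNC console
    ]
    return "\n".join(lines) + "\n"


print(render_xm_config("freebsd-test", 8192, 2, "/mnt/vmstore/freebsd-test.img"))
```

Saved to a file such as /etc/xen/freebsd-test.cfg (also hypothetical), it would be started with `xm create /etc/xen/freebsd-test.cfg`. Repeating this by hand for every VM is exactly the chore a management UI removes.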

The simple route would have been to install Virt Manager and a VNC server (or use SSH tunneling). That would still only work for Rob and me, since access to the Xen servers should be limited.

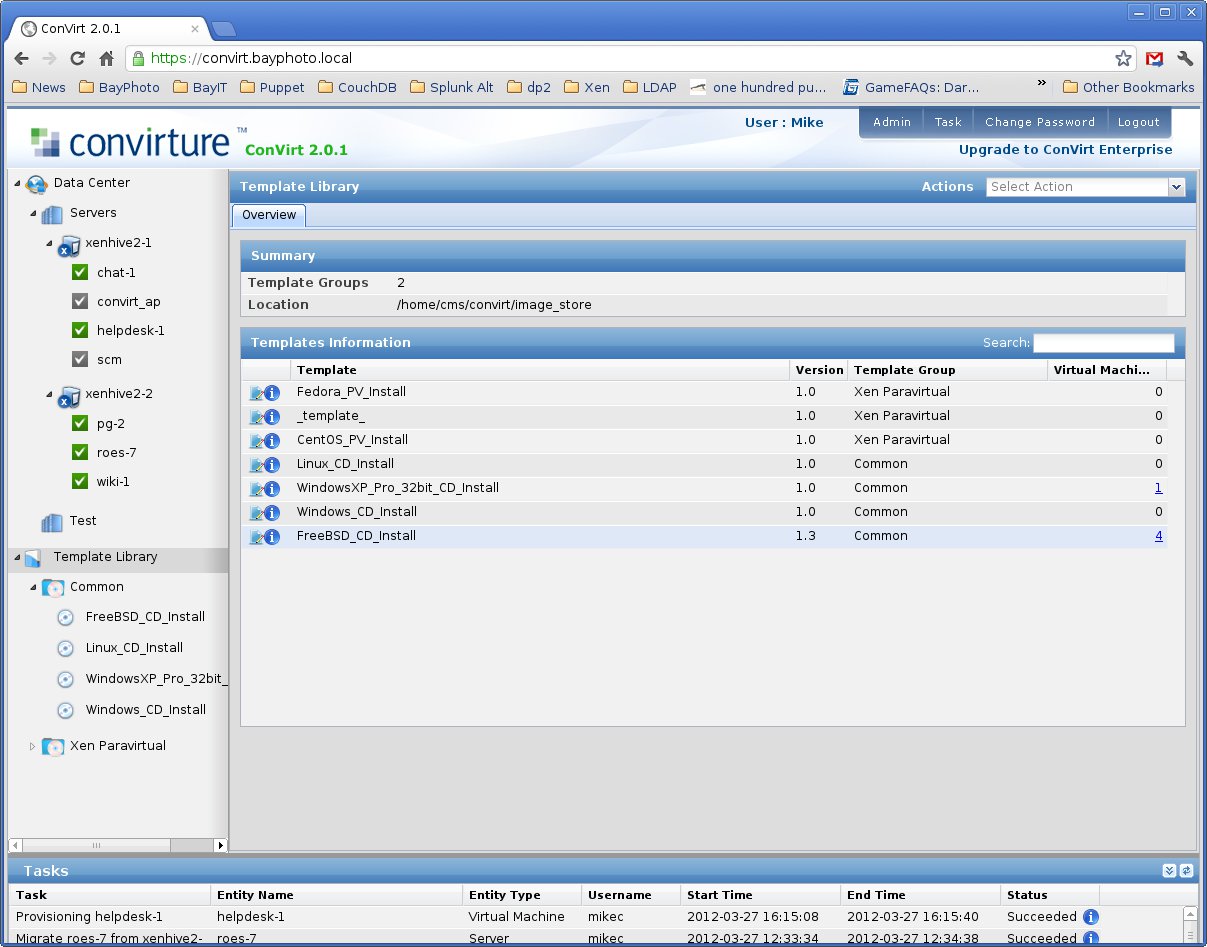

I came across Convirture, which has a free/open source edition of its hypervisor manager. Convirture uses libvirt and works with either KVM or Xen.

They also offer a nice virtual appliance that you can download and start with “xm create” on one of your hypervisor servers. After messing around with rolling my own install, I decided the appliance was much better to use.


FYI: the VMs that are greyed out were created outside of Convirture. I had created one VM on the new Xen cluster before Rob created the new FreeBSD template to install from; I’ll have to convert it at some point.

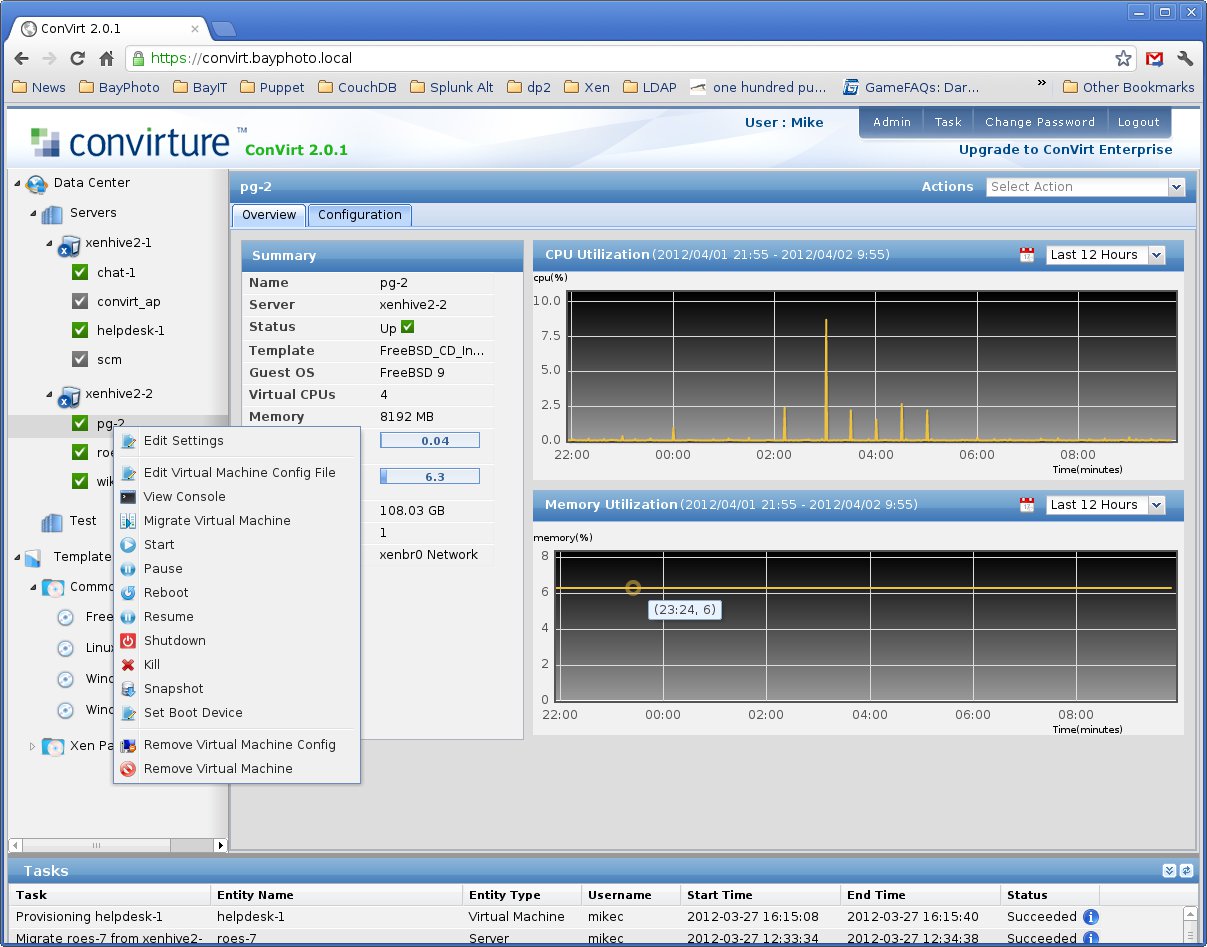


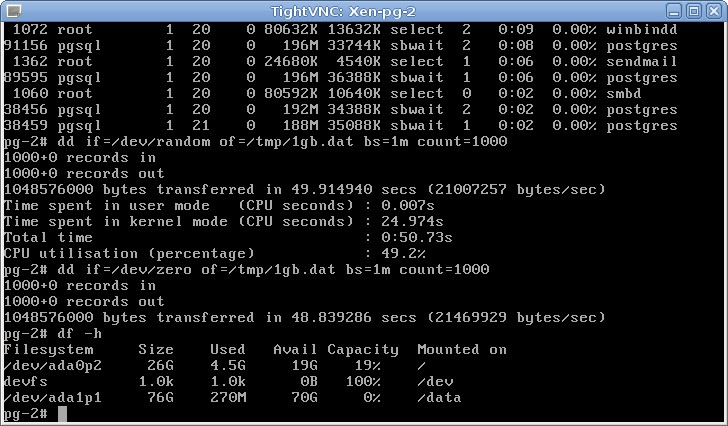

You can either launch the Java applet (it works great on Windows) or use your own VNC client. Since I decided not to install Java on my FreeBSD desktop, I connect with my own VNC viewer: after telling Convirture to connect me to a console, I simply open a shell and type:

    mikec@b-bot ~> vncviewer convirt.bayphoto.local:6900

And I’m presented with this wonderful window.

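A note on the address format, since it trips people up: with most VNC viewers, `host:N` means display N, i.e. TCP port 5900 + N, while `host::P` means raw port P. Some viewers also treat a display number of 100 or more as a literal port, which would explain `:6900` reaching a console on port 6900; that threshold is an assumption to check against your viewer’s man page. The helper below is hypothetical and only encodes those conventions.

```python
VNC_BASE_PORT = 5900


def vnc_tcp_port(target: str, raw_port_threshold: int = 100) -> int:
    """Return the TCP port a VNC target string refers to.

    "host::P" is an explicit port; "host:N" is display N (port 5900 + N).
    raw_port_threshold is an assumption: some viewers treat N >= 100 as a
    literal port number instead of a display number.
    """
    _host, sep, rest = target.partition(":")
    if not sep:
        return VNC_BASE_PORT            # no display given: display 0
    if rest.startswith(":"):
        return int(rest[1:])            # "host::P" form
    display = int(rest)
    return display if display >= raw_port_threshold else VNC_BASE_PORT + display


print(vnc_tcp_port("convirt.bayphoto.local:6900"))
```

Passing a very large `raw_port_threshold` models a viewer that always adds 5900, in which case the same target would point at port 12800.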

From there, I can install portupgrade (which provides portinstall) and Puppet, and then it’s all up to our node definition to do the rest.

There is a lot you can do with the open source version, and after some configuration file tuning, I was able to get live migrations to work.
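The exact settings that needed tuning aren’t recorded here. With the xm/xend toolstack, one common prerequisite for live migration (`xm migrate --live <domain> <host>`) is that the relocation server is enabled in /etc/xen/xend-config.sxp on both hosts, via `(xend-relocation-server yes)` plus the port and allowed-hosts entries. As a hedged sketch of that one check, and not necessarily what was changed on our hosts, this pulls those entries out of the file’s text:

```python
import re
from typing import Dict

# Matches active entries such as "(xend-relocation-port 8002)". Commented-out
# lines start with "#", so the pattern anchored on "(" skips them.
SETTING = re.compile(r"^\s*\((xend-relocation-[a-z-]+)\s+(.*?)\)\s*$")


def relocation_settings(sxp_text: str) -> Dict[str, str]:
    """Collect the active xend-relocation-* settings from xend-config.sxp text."""
    settings = {}
    for line in sxp_text.splitlines():
        match = SETTING.match(line)
        if match:
            # Strip surrounding quotes, e.g. '' becomes an empty string.
            settings[match.group(1)] = match.group(2).strip("'\"")
    return settings


def live_migration_ready(sxp_text: str) -> bool:
    """True if the relocation server is switched on."""
    return relocation_settings(sxp_text).get("xend-relocation-server") == "yes"


SAMPLE = """
#(xend-relocation-server no)
(xend-relocation-server yes)
(xend-relocation-port 8002)
(xend-relocation-hosts-allow '')
"""
print(relocation_settings(SAMPLE))
print(live_migration_ready(SAMPLE))
```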

Templates are really easy to work with, and I’d like to create a base FreeBSD image that already has Puppet installed and ready to go. That would make deploying new VMs a lot faster. The same goes for Windows.

Now the fun part: how much trouble was it to set all of this up?

As I mentioned above, we use Xen here. At this point, it honestly doesn’t matter to me whether KVM is leaps and bounds better than Xen. What matters is that we already have a stable environment.

I tried various distros: Debian, CentOS, Fedora, and Scientific Linux.

I also tried two major kernel branches: 3.2 and 2.6.32.

GlusterFS gave me problems on Debian but not on Fedora; however, Fedora is geared toward KVM, and I wanted to jump through as few hoops as possible.

It took a few days to notice, but GlusterFS would not work reliably on the 3.2 kernel: the system would simply reboot when I tried to write data to the Gluster filesystem. No warning, no nice error log, nothing.

In the end, I fell back to a RHEL-based distro (Scientific Linux), since Gluster seems to work well on RHEL-based systems. Also, since my Puppet manifests already support CentOS, it was easy to add Scientific Linux to those modules.

I also fell back to the 2.6.32 kernel, which is the other reason I couldn’t use Debian: it only offered Xen 4 with 3.2 kernels.

Scientific Linux is RHEL-based, so it ships KVM packages, not Xen packages. So I followed this guide: http://www.crc.id.au/xen-on-rhel6-scientific-linux-6-centos-6-howto/

Then I found out that the stock libvirt is not built with Xen support, so I had to follow this guide as well: http://wiki.xensource.com/xenwiki/RHEL6Xen4Tutorial

Also, Scientific Linux moved away from the traditional Linux Ethernet device naming, ethX, to emX (what would have been eth0 is now em1). This caused a little hiccup with the configuration I was managing, but it was a small annoyance.
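The emX names most likely come from biosdevname, which RHEL 6 and its rebuilds apply on some hardware (notably Dell servers): onboard ports become em1, em2, and so on (numbering starts at 1), and PCI add-in cards become p&lt;slot&gt;p&lt;port&gt;. Configuration that hard-codes eth0 breaks under that scheme. A minimal, hypothetical helper for picking the primary NIC regardless of naming scheme might look like this; the preference order is an assumption.

```python
import re
from typing import Dict, List, Optional

# biosdevname-style names (em<port>, p<slot>p<port>) plus the legacy eth<N>.
PATTERNS = {
    "onboard": re.compile(r"^em(\d+)$"),
    "legacy": re.compile(r"^eth(\d+)$"),
    "pci": re.compile(r"^p(\d+)p(\d+)$"),
}
RANK = {"onboard": 0, "legacy": 1, "pci": 2}   # assumed preference order


def classify_nic(name: str) -> Optional[Dict[str, object]]:
    """Describe an interface name, or return None for bridges, lo, etc."""
    for kind, pattern in PATTERNS.items():
        match = pattern.match(name)
        if match:
            return {"kind": kind, "numbers": tuple(int(g) for g in match.groups())}
    return None


def primary_nic(names: List[str]) -> Optional[str]:
    """Pick the lowest-numbered NIC of the most preferred kind."""
    candidates = []
    for name in names:
        info = classify_nic(name)
        if info is not None:
            candidates.append((RANK[info["kind"]], info["numbers"], name))
    return min(candidates)[2] if candidates else None


print(primary_nic(["lo", "em2", "em1", "xenbr0", "virbr0"]))
```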

Here is my main beef: I tried everything I could to stick with a stock OS that met the following requirements:

- Xen 4.x

- GlusterFS support
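Those two requirements can be checked from a handful of system files. This is a minimal sketch assuming the Dom0 kernel exposes /sys/hypervisor (type and version/major) and that FUSE support appears in /proc/filesystems; FUSE is only a prerequisite for the GlusterFS native client, not proof that the GlusterFS packages are installed. The function names are hypothetical.

```python
from typing import Dict, Optional


def host_requirements(hypervisor_type: Optional[str],
                      xen_major: Optional[str],
                      proc_filesystems: str) -> Dict[str, bool]:
    """Evaluate the requirements from already-read file contents.

    hypervisor_type / xen_major: contents of /sys/hypervisor/type and
    /sys/hypervisor/version/major, or None if the files are missing.
    proc_filesystems: contents of /proc/filesystems.
    """
    fs_names = {line.split()[-1] for line in proc_filesystems.splitlines()
                if line.strip()}
    is_xen = (hypervisor_type or "").strip() == "xen"
    return {
        "xen_4x": is_xen and (xen_major or "").strip() == "4",
        # The GlusterFS native client runs on FUSE.
        "fuse": "fuse" in fs_names,
    }


def read_optional(path: str) -> Optional[str]:
    """Return a file's contents, or None if it cannot be read."""
    try:
        with open(path) as f:
            return f.read()
    except OSError:
        return None


if __name__ == "__main__":
    print(host_requirements(
        read_optional("/sys/hypervisor/type"),
        read_optional("/sys/hypervisor/version/major"),
        read_optional("/proc/filesystems") or "",
    ))
```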

That was shockingly difficult to do. In the end, I had to recompile a few packages, and I still get these fun messages:

    brctl show
    bridge name     bridge id               STP enabled     interfaces
    virbr0          /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    /sys/class/net/virbr0/bridge: No such file or directory
    8000.52540043692c       yes             virbr0-nic
    xenbr0          /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    /sys/class/net/xenbr0/bridge: No such file or directory
    8000.d067e5fbefa7       no              em1
                                            tap39.0
                                            tap40.0
                                            tap41.0
                                            tap43.0
                                            vif39.0
                                            vif40.0
                                            vif41.0
                                            vif43.0

Newer RHEL 6 based OSes support IGMP snooping on bridges, and bridge-utils does not have the proper support for this. It’s annoying, and it took a good day of research to reach a reasonable resolution.
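The bridge information itself survives in that output; it is the sysfs errors that make it noisy. As a hedged sketch, assuming the errors go to stderr so that something like `brctl show 2>/dev/null` leaves only the table, this parses that table into bridges and their ports. The sample is rebuilt from the output above and trimmed to a few interfaces.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Bridge:
    bridge_id: str
    stp_enabled: bool
    interfaces: List[str] = field(default_factory=list)


def parse_brctl_show(stdout: str) -> Dict[str, Bridge]:
    """Parse `brctl show` stdout (stderr discarded) into name -> Bridge."""
    bridges: Dict[str, Bridge] = {}
    current: Optional[Bridge] = None
    for line in stdout.splitlines()[1:]:       # skip the header row
        fields = line.split()
        if not fields:
            continue
        if line[0].isspace():                  # continuation row: one more port
            if current is not None:
                current.interfaces.append(fields[0])
        elif len(fields) >= 3:                 # name, id, STP flag, [first port]
            current = Bridge(fields[1], fields[2] == "yes", fields[3:])
            bridges[fields[0]] = current
    return bridges


SAMPLE = (
    "bridge name\tbridge id\t\tSTP enabled\tinterfaces\n"
    "virbr0\t\t8000.52540043692c\tyes\t\tvirbr0-nic\n"
    "xenbr0\t\t8000.d067e5fbefa7\tno\t\tem1\n"
    "\t\t\t\t\t\t\ttap39.0\n"
    "\t\t\t\t\t\t\tvif39.0\n"
)
for name, bridge in parse_brctl_show(SAMPLE).items():
    print(name, bridge.stp_enabled, bridge.interfaces)
```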

With all of this, I would have been really pleased if BHyVe (“Bee hive”, the BSD hypervisor project) were ready and production quality.

All of these little issues with different kernels, base packages, and vendor packages are just par for the course when dealing with Linux. It can sometimes be mind-bogglingly frustrating to deal with such a chaotic environment when you come from a stable, well-documented one like FreeBSD.

FreeBSD lacks this kind of support for various reasons, the biggest being that it has less market share, a smaller developer and user community, and very, VERY little marketing. Linux is still the right tool for this job, but what a sloppy tool it can be. Maybe in a few years BHyVe will be a viable alternative.

In the end, I’m happy with this environment. I think Xen is a great fit for us and meets our needs. Plus, the cost of doing business is much lower when we use free software: we can feasibly deploy 32 dual-CPU VMs with 8GB of RAM each for about $400 per instance. And that is being overly generous!
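The 32-VM figure lines up neatly with the hardware described earlier. A quick check using only numbers from this post; the implied total is derived from the ~$400 per-instance estimate, not a quoted price.

```python
VMS = 32
VCPUS_PER_VM = 2
RAM_PER_VM_GB = 8
COST_PER_VM_USD = 400      # the post's (generous) per-instance estimate

HOST_CORES = 64            # 2 servers x 4 sockets x 8 cores
GUEST_RAM_GB = 254         # 256GB installed, minus ~2GB for Dom0


def density_check() -> dict:
    """Compare the proposed VM fleet against the cluster's resources."""
    vcpus = VMS * VCPUS_PER_VM
    ram_gb = VMS * RAM_PER_VM_GB
    return {
        "vcpus": vcpus,
        "vcpus_per_core": vcpus / HOST_CORES,
        "ram_gb": ram_gb,
        "ram_over_guest_gb": ram_gb - GUEST_RAM_GB,   # > 0 means slight overcommit
        "implied_total_usd": VMS * COST_PER_VM_USD,
    }


print(density_check())
```

That works out to one vCPU per physical core, with guest memory about 2GB over what is left after Dom0, which is why “feasibly” is the right word.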