freebsd and multipath

2010-06-24

I didn’t find any blog posts or discussions on FreeBSD and multipath (for storage) that weren’t man pages.

That means it is up to me to write about it :)

Hardware

CPU

Machine class: amd64

CPU Model: Intel(R) Xeon(R) CPU E5530 @ 2.40GHz

No. of Cores: 16

Memory

Total real memory available: 65511 MB

Logically used memory: 3945 MB

Logically available memory: 61565 MB

Storage

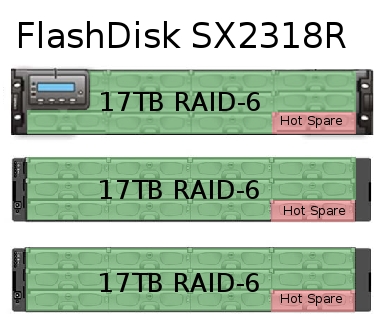

The storage is a large (~90TB) enterprise-class Fibre Channel array, a DataDirect Networks S2A9900. Connected to it are two dual-port QLogic 2532 8Gb HBAs. We also have two SSD drives (configured as a RAID1 device) for the ZFS Intent Log.

The storage array was configured from 120 1TB, 7200RPM Hitachi SATA drives. It has 12 volumes in total, each composed of 10 of those drives (1 parity, 1 spare), or ~7TB per volume.

The S2A9900 has two controllers: one is responsible for LUNs 1-6, the other for LUNs 7-12. Every LUN is presented to all four Fibre Channel ports, so each of the 12 LUNs shows up four times. This got a little messy; sorting out 48 raw disk devices takes some patience and a decent attention span…

Yeah, I did make a few typos here and there; thankfully, creating and clearing disk labels is easy.

# camcontrol devlist|grep lun\ 0

at scbus0 target 0 lun 0 (pass0,da0)

at scbus1 target 0 lun 0 (pass6,da6)

at scbus4 target 0 lun 0 (pass24,da24)

at scbus5 target 0 lun 0 (pass30,da30)

# camcontrol inquiry da0 -S

108EA1B10001

# camcontrol inquiry da6 -S

108EA1B10001

# camcontrol inquiry da24 -S

108EA1B10001

# camcontrol inquiry da30 -S

108EA1B10001

# gmultipath label -v DDN-v00 /dev/da0 /dev/da6 /dev/da24 /dev/da30

Done.

# gmultipath status

Name Status Components

multipath/DDN-v00 N/A da0

da6

da24

da30

Now, to do that 11 more times…

Whew, hard work!
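In hindsight, the matching of paths by serial number could probably be scripted. Here is a minimal sketch of how I might do it, not what I actually ran: the function names are made up for illustration, and it assumes every LUN reports a distinct serial number with no spaces in it and that all `da` devices on the box belong to the array. It only prints the gmultipath commands, so they can be checked by eye before anything is labelled.

```sh
#!/bin/sh
# Hypothetical helpers; the names are illustrative, not part of FreeBSD.

# Read "device serial" pairs on stdin and print one gmultipath label
# command per distinct serial number. Nothing is executed here.
# Labels are numbered DDN-v00, DDN-v01, ... in order of first appearance
# (an assumption; adjust to match the LUN numbering you want).
plan_multipath_labels() {
    awk '
        BEGIN { n = 0 }
        {
            # Remember the order in which new serial numbers show up.
            if (!($2 in paths)) { order[n++] = $2 }
            # Append this device to the path list for its serial.
            paths[$2] = paths[$2] " /dev/" $1
        }
        END {
            for (i = 0; i < n; i++)
                printf "gmultipath label -v DDN-v%02d%s\n", i, paths[order[i]]
        }
    '
}

# Emit "device serial" pairs for every da device CAM knows about.
# Assumes the device name appears as daN inside the parentheses of
# each `camcontrol devlist` line, as in the listing above.
list_da_serials() {
    camcontrol devlist |
    sed -n 's/.*[(,]\(da[0-9][0-9]*\)[,)].*/\1/p' |
    while read -r dev; do
        echo "$dev $(camcontrol inquiry "$dev" -S < /dev/null)"
    done
}

# Dry run on the FreeBSD host:
#   list_da_serials | plan_multipath_labels
```

Once the printed commands look right, the same pipeline can be fed to `sh`. Keeping the planning step separate from the execution step is deliberate, given how easy it is to mix up 48 devices.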

Now, to create a simple ZFS pool across all 12 LUNs, with the SSD RAID1 device (mfid1) as the log:

# zpool create zfs multipath/DDN-v00 multipath/DDN-v01 multipath/DDN-v02 multipath/DDN-v03 multipath/DDN-v04 multipath/DDN-v05 multipath/DDN-v06 multipath/DDN-v07 multipath/DDN-v08 multipath/DDN-v09 multipath/DDN-v10 multipath/DDN-v11 log mfid1

# zpool status

pool: zfs

state: ONLINE

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

zfs ONLINE 0 0 0

multipath/DDN-v00 ONLINE 0 0 0

multipath/DDN-v01 ONLINE 0 0 0

multipath/DDN-v02 ONLINE 0 0 0

multipath/DDN-v03 ONLINE 0 0 0

multipath/DDN-v04 ONLINE 0 0 0

multipath/DDN-v05 ONLINE 0 0 0

multipath/DDN-v06 ONLINE 0 0 0

multipath/DDN-v07 ONLINE 0 0 0

multipath/DDN-v08 ONLINE 0 0 0

multipath/DDN-v09 ONLINE 0 0 0

multipath/DDN-v10 ONLINE 0 0 0

multipath/DDN-v11 ONLINE 0 0 0

logs ONLINE 0 0 0

mfid1 ONLINE 0 0 0

errors: No known data errors
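Typing out twelve provider names by hand is another place for typos. A small sketch of how the member list for the zpool create command could be generated instead; `pool_members` is a hypothetical helper name, and it assumes the labels follow the DDN-vNN pattern used above.

```sh
#!/bin/sh
# Hypothetical helper: print multipath/DDN-v00 .. multipath/DDN-v(N-1)
# on a single line, separated by spaces.
pool_members() {
    count=$1
    i=0
    members=""
    while [ "$i" -lt "$count" ]; do
        members="$members $(printf 'multipath/DDN-v%02d' "$i")"
        i=$((i + 1))
    done
    # Strip the leading space left over from the first append.
    echo "${members# }"
}

# On the FreeBSD host (left unquoted on purpose so the names split
# into separate arguments):
#   zpool create zfs $(pool_members 12) log mfid1
```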

Results

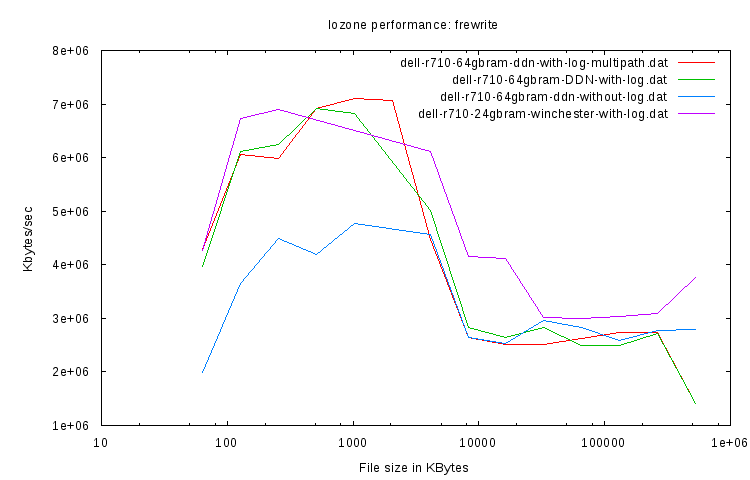

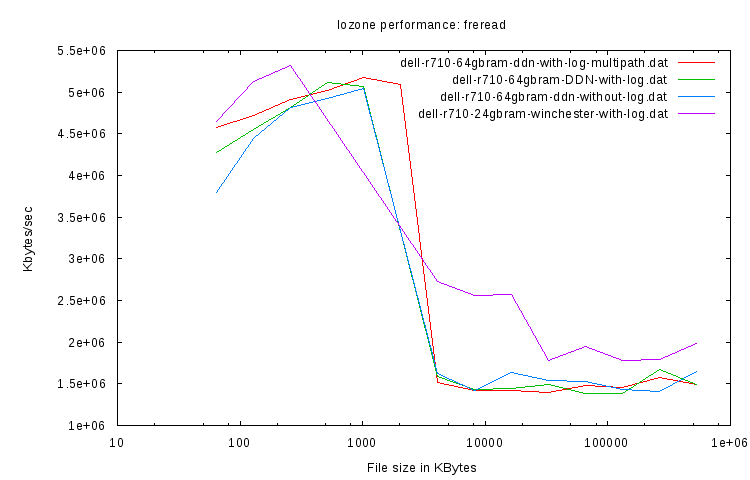

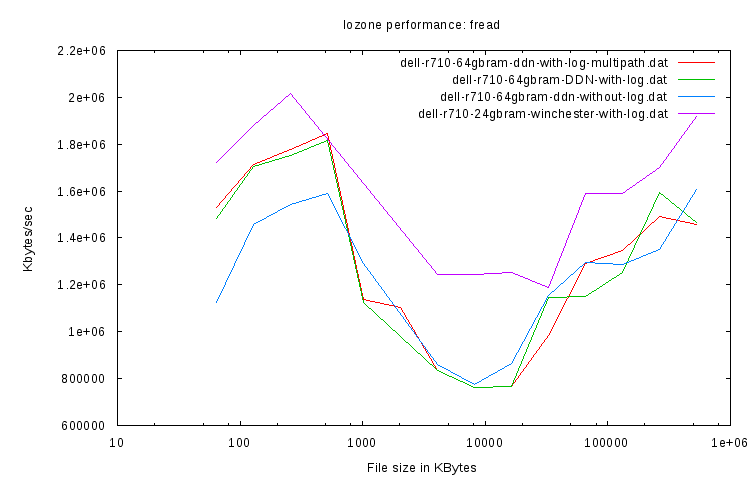

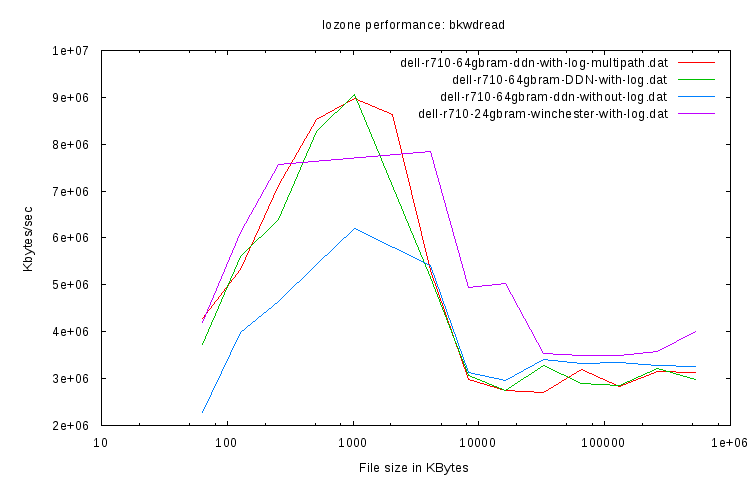

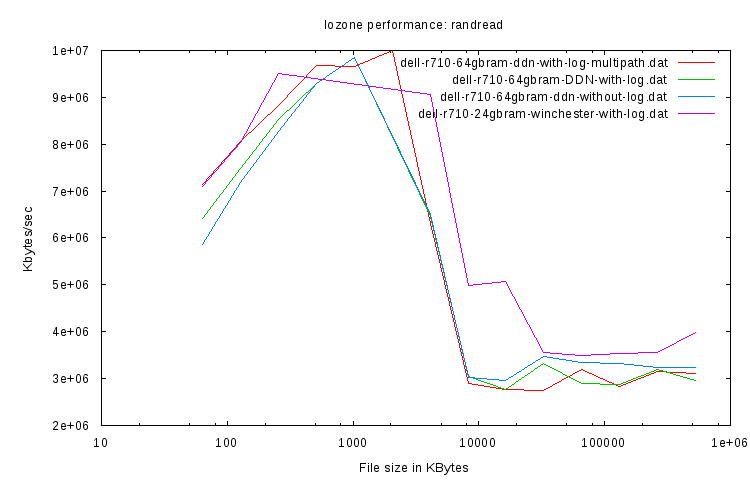

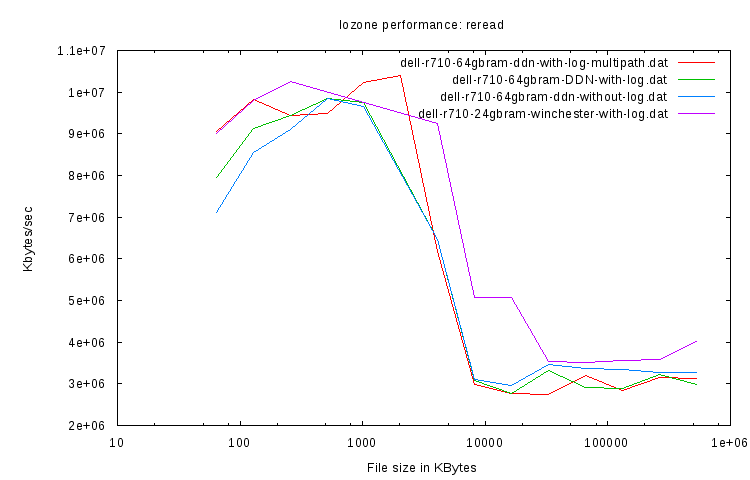

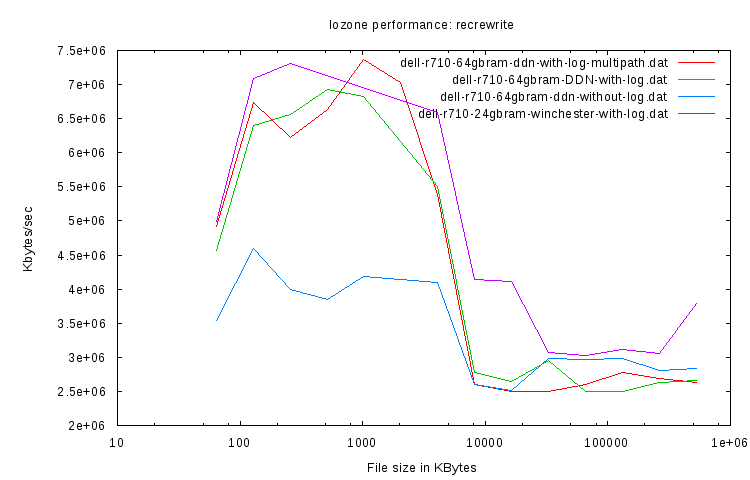

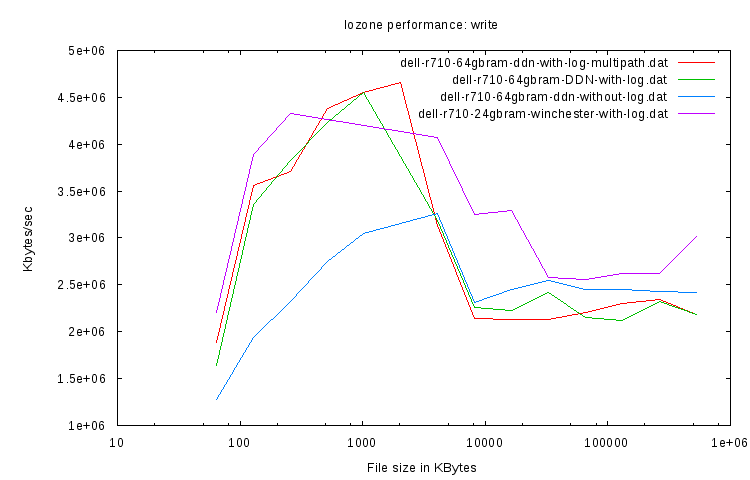

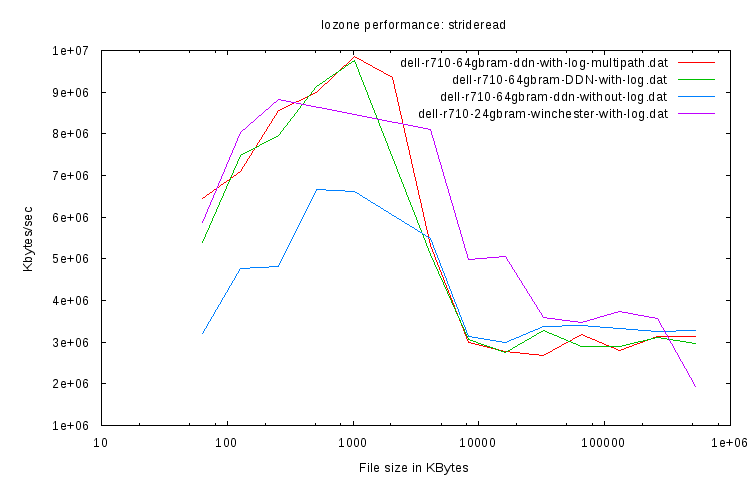

These results were obtained from two similar servers. The other server uses a Winchester Systems storage array and has 24GB of system memory. The Winchester storage is ~40TB of 2TB SATA disks.

I used IOZone for the test (iozone -a). The default iozone run uses file sizes from 64k to 512MB, and since I’m trying to see how the server might actually react in the real world, I’m okay with this (i.e., I fully understand that a LOT of caching is taking place, and I want that for right now).

The S2A9900 is a pretty nice device. Although you have to use TELNET (yeesh, couldn’t they spend a few more bucks on a small ARM processor and use ssh?), the controllers have a decent command line environment with HELP pages. What is also nice is that the company provides the documentation for its products for free, with no registration required. Good job!

As for raw read and write speeds, those are hard to nail down. I’ve been using IOZone, and when I run it and watch ‘zpool iostat 1’, the ZFS pool stays at a constant 200MB/sec for writes. I’ve seen it pop up higher, to 250MB or even 500MB, but 200 seems to be the sustained ceiling. I’ve tested with and without a dedicated log device, with and without gmultipath, and finally with the SSD RAID1 as an L2ARC cache device. All results are nearly identical. Reads are pretty crazy, though: with 64GB of system memory, reading a file runs at nearly 1GB/sec.
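Rather than eyeballing the scrolling output, the write column of ‘zpool iostat’ could be averaged with a little awk. This is only a sketch: `write_bw_summary` is a made-up name, and it assumes the usual seven-column layout (pool, used, avail, read ops, write ops, read bandwidth, write bandwidth) with K/M/G/T suffixes treated as powers of 1024.

```sh
#!/bin/sh
# Hypothetical helper: summarize the write-bandwidth column of
# `zpool iostat <pool> <interval>` output read from stdin.
write_bw_summary() {
    pool=$1
    awk -v pool="$pool" '
        # Convert a value such as "200M" or "1.5G" to megabytes.
        function to_mb(v,    num, unit) {
            unit = substr(v, length(v), 1)
            num = v + 0              # awk keeps the leading numeric part
            if (unit == "K") return num / 1024
            if (unit == "M") return num
            if (unit == "G") return num * 1024
            if (unit == "T") return num * 1024 * 1024
            return num / (1024 * 1024)   # no suffix: plain bytes
        }
        # Only data rows for the named pool; header lines are skipped.
        $1 == pool && NF == 7 {
            mb = to_mb($7)
            sum += mb
            n++
            if (mb > peak) peak = mb
        }
        END {
            if (n > 0)
                printf "samples=%d avg=%.0fMB/s peak=%.0fMB/s\n", n, sum / n, peak
        }
    '
}

# On the FreeBSD host, sample once a second for a minute:
#   zpool iostat zfs 1 60 | write_bw_summary zfs
```

One caveat: the first line zpool iostat prints is an average since boot rather than a one-second sample, so it skews the result a bit unless it is dropped first.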